C++17 parallel algorithms and HIPSTDPAR

The C++17 standard added the concept of parallel algorithms to the pre-existing C++ Standard Library. The parallel version of algorithms like std::transform maintain the same signature as the regular serial version, except for the addition of an extra parameter specifying the execution policy to use. This flexibility allows users that are already using the C++ Standard Library algorithms to take advantage of multi-core architectures by just introducing minimal changes to their code.

Starting with ROCm 6.1, the parallel algorithms seamlessly offload to AMD accelerators via HIPSTDPAR, as long as the user is willing to add an extra compiler flag or two.

Whilst the functionality introduced by HIPSTDPAR is available for all AMD GPUs (including consumer cards), this blog post focuses on the AMD CDNA2™ and CDNA3™ architectures (MI200 and MI300 series cards, respectively) using ROCm 6.1. As a code example, we focus on the Travelling Salesman Problem (TSP) solver available here.

The travelling salesman problem

The travelling salesman problem tries to answer the following question: “Given a list of cities and the distances between each pair of cities, what is the shortest possible route that visits each city exactly once and returns to the origin city?”. This problem is particularly hard to solve (NP-hard) due to exponential complexity; adding an extra city to the list causes an exponential growth in the number of combinations to check. Solving this problem by just enumerating all possible combinations and checking each one of them is computationally prohibitive for problems with more than 17 or 18 cities. For real world applications, advanced methods are used (cutting planes and branch and bound techniques) but for the purposes of this blog we focus on a embarrassingly parallel implementation of the brute-force approach.

The TSP solver we look at relies on the following function to check the various permutations of cities and pick the one with the lowest cost/distance. Here is a detailed implementation that does not make use of any parallelism:

template<int N>

route_cost find_best_route(int const* distances)

{

return std::transform_reduce(

counting_iterator(0),

counting_iterator(factorial(N)),

route_cost(),

[](route_cost x, route_cost y) { return x.cost < y.cost ? x : y; },

[=](int64_t i) {

int cost = 0;

route_iterator<N> it(i);

// first city visited

int from = it.first();

// visited all other cities in the chosen route

// and compute cost

while (!it.done())

{

int to = it.next();

cost += distances[to + N*from];

from = to;

}

// update best_route -> reduction

return route_cost(i, cost);

});

}

The std::transform_reduce algorithm performs two operations:

-

a transformation (equivalent to a map operation) implemented by the lambda function passed as final argument;

-

a reduction operation, expressed as lambda function as fourth argument.

The function above runs through all elements from 0 to N!, each of which expresses a particular permutation of all cities, computes the cost of the particular path, and returns an instance of route_cost object that includes the id of the particular path and the cost associated with it. At the end, a reduction is performed by comparing the cost of the various paths and selecting the one with lowest cost.

On an AMD Zen4 processor, this serial code takes about 11.52 seconds to compute the best path for a TSP instance involving twelve cities. The same code takes about 156 seconds for a TSP instance involving thirteen cities. This is a normal consequence of the exponential growth of the search space imposed by the TSP.

Execution policies and HIPSTDPAR

Since each of the N! paths are independent, computing their individual cost is an embarrassingly parallel operation. C++17 allows developers to easily parallelize the previous code by just passing an execution policy as the first argument of the algorithm invocation. The C++17 standard defines three possible execution policies:

-

std::execution::sequenced_policyand the corresponding policy object to pass as argumentstd::execution::seq -

std::execution::parallel_policyand the corresponding policy object to pass as argumentstd::execution::par -

std::execution::parallel_unsequenced_policyand the corresponding policy object to pass as argumentstd::execution::par_unseq

Execution policies allow the user to convey information to the implementation about the invariants that user code shall enforce / maintain, thus allowing the latter to possibly adopt more favourable / performant execution.

std::execution::sequenced_policy

The sequenced policy constrains the implementation to perform all operations on the thread that invoked the algorithm, inhibiting possible parallel execution. All operations are indeterminately sequenced within the caller thread, which implies subsequent invocations of the same algorithm, within the same thread, can have their operations sequenced differently.

std::execution::parallel_policy

The parallel policy allows the implementation to employ parallel execution. Operations may be performed on the thread that invoked the algorithm or on threads created by the standard library implementation. All operations are indeterminately sequenced within a thread, for all threads used to perform the computation described by the algorithm invocation. Furthermore, there are no ordering guarantees provided for the element access function invocations themselves. Compared to the sequenced policy, additional constraints are imposed on the various components used by the algorithm. In particular, operations on iterators, values, and callable objects, as well as their transitive closure, must be data race free.

In the previous example, it is possible to parallelize the find_best_route function by passing as first extra argument the std::execution:par policy as follows:

By making this simple change, the code will now run on all CPU cores available. On the CPU portion of a MI300A, equipped with 48 Zen4 logical cores, solving an instance of TSP with 12 cities takes about 0.34 seconds. This parallel run is almost 34x faster compared to the 11.52 seconds needed by the serial version! For an instance of TSP with thirteen cities the parallel version takes about 5 seconds. Finally, for a bigger problem involving fourteen cites, the 48 Zen4 logical cores take about 77 seconds.

std::execution::parallel_unsequenced_policy

This policy guarantees the most restrictive requirements are met by user provided code. An algorithm invoked with this policy may perform the steps in unordered and unsequenced ways with respect to one another. This means that the various operations can be interleaved with each other on the same thread. Also any given operation may start on a thread and end on a different thread. When specifying the parallel unsequenced policy, the user guarantees that no operations that entail calling a function that synchronizes-with another function are employed. In practice, this means that user code does not do any memory allocation / deallocation, only relies on lock-free specializations of std::atomic, and does not rely on synchronization primitives such as std::mutex.

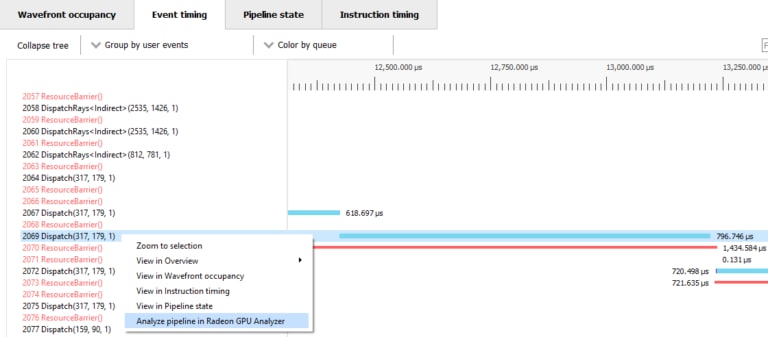

This policy is currently the only one that can be chosen to offload parallelism to AMD accelerators. To trigger the GPU offload of all parallel algorithms invoked with the parallel unsequenced policy, the --hipstdpar flag must be passed at compile time. Furthermore, for GPU targets other than the current default (gfx906), the user must also pass --offload-arch= specifying which GPU is being used.

On MI300A, by simply switching policy and recompiling with the aforementioned flags, the execution time for an instance of TSP with thirteen cities goes down to 0.5 seconds. When using fourteen cities, the use of the GPU portion of MI300A brings down the execution time to 4.8 seconds from the 77 seconds needed by the parallel version running on 48 Zen4 logical cores. And because everybody loves a good table, let us conclude this section by summarising the progression from sequenced execution on the CPU to parallel unsequenced execution offloaded to the accelerator:

|

14-city TSP |

Timing (s) |

|---|---|

|

|

2337 |

|

|

77 |

|

|

75 |

|

|

4.8 |

TeaLeaf

A more complex example showing the use and performance of HIPSTDPAR is TeaLeaf. The code is a C++ implementation of the TeaLeaf heat conduction mini-app from the University of Bristol, UK. Multiple implementations illustrate various parallel programming paradigms, including HIP and parallelised standard algorithms. This allows us to make a fair performance comparison between an optimized, HIP-based implementation and a HIPSTDPAR one. For the purpose of this test, we selected the tea_bm_5.in benchmark, comprising of a 2D grid of 4000×4000 cells and 10 time steps.

For the HIPSTDPAR version, on a MI300A card, the following output is obtained:

As for the HIP version, it performs as follows:

The performance difference in-between the two versions stems from the overhead associated with handling the initial page-in of non-resident memory. To “even things out”, the HIP version can be adjusted to use hipMallocManaged() as well, instead of hipMalloc(). This particular configuration is already available in the HIP version of TeaLeaf and it can be enabled by passing a simple flag at compile time. The following is the output for the HIP version of TeaLeaf when using hipMallocManaged() and XNACK for all GPU allocations.

As expected, the performance of the HIP version when introducing hipMallocManaged() is comparable with the one observed for the HIPSTDPAR version. In closing, we will note that ongoing work is expected to reduce the overhead, thus bringing the offloaded version closer to the HIP one.

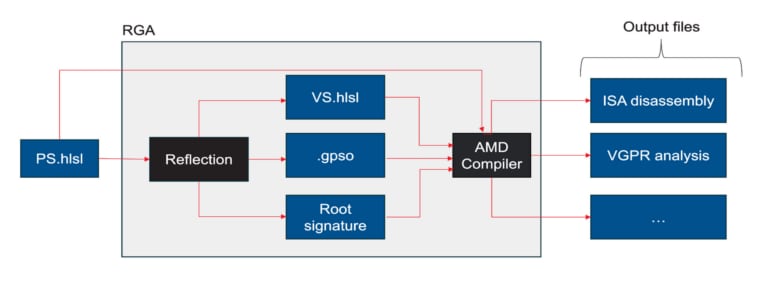

Nuts and bolts of HIPSTDPAR

The ability to offload C++ Standard Parallel algorithm execution to the GPU relies on the interaction between the LLVM compiler, HIPSTDPAR, and rocThrust. Starting from ROCm 6.1, the LLVM compiler used to compile regular HIP codes will be able to forward invocations of standard algorithms which take the parallel_unsequenced_policy execution policy to the HIPSTDPAR header-only library when the --hipstdpar flag is passed. The header-only library is in charge of mapping the parallel algorithms used by C++ Standard Library into the equivalent rocThrust algorithm invocation. This very simple design allows for a low overhead implementation of the offloading for parallel standard algorithms. A natural question to ask at this point is: “computation is nice but what about the memory it operates on?”. By default, HIPSTDPAR assumes that the underlying system is HMM (Heterogeneous Memory Management)-enabled, and that page migration is possible via the handling of retry-able page-faults implemented atop XNACK (e.g., export HSA_XNACK=1). This particular mode is referred to as HMM Mode.

When these two requirements are satisfied, code offloaded to the GPU (implemented via rocThrust) triggers the page migration mechanism and data will automatically migrate from host to device. On MI300A, although physical migration is neither needed nor useful, handling page faults via XNACK is still necessary. For more details about page migration please refer to the following blog post.

On systems without HMM / XNACK we can still use HIPSTDPAR by passing an extra compilation flag: --hipstdpar-interpose-alloc. This flag will instruct the compiler to replace all dynamic memory allocations with compatible hipManagedMemory allocations implemented in the HIPSTDPAR header-only library. For example, if the application being compiled, or one of its transitive inclusions, allocates free store memory via operator new, that call will be replaced with a call to __hipstdpar_operator_new. By looking at the implementation of that function in the HIPSTDPAR library we see that the actual allocation is performed via the hipMallocManaged() function. By doing so on a non HMM-enabled system, host memory is pinned and directly accessible by the GPU without requiring any page-fault driven migration to the GPU memory. This particular mode is referred to as Interposition Mode.

Restrictions

For both HMM and Interposition modes, the following restrictions apply:

-

Pointers to function, and all associated features, such as e.g. dynamic polymorphism, cannot be used (directly or transitively) by the user provided callable passed to an algorithm invocation;

-

Global / namespace scope /

static/threadstorage duration variables cannot be used (directly or transitively) in name by the user provided callable;-

When executing in HMM Mode they can be used in address e.g.:

namespace { int foo = 42; } bool never(const vector<int>& v) { return any_of(execution::par_unseq, cbegin(v), cend(v), [](auto&& x) { return x == foo; }); } bool only_in_hmm_mode(const vector<int>& v) { return any_of(execution::par_unseq, cbegin(v), cend(v), [p = &foo](auto&& x) { return x == *p; }); }

-

-

Only algorithms that are invoked with the

parallel_unsequenced_policyare candidates for offload; -

Only algorithms that are invoked with iterator arguments that model

random_access_iteratorare candidates for offload; -

Exceptions cannot be used by the user provided callable;

-

Dynamic memory allocation (e.g.

operator new) cannot be used by the user provided callable; -

Selective offload is not possible i.e. it is not possible to indicate that only some algorithms invoked with the

parallel_unsequenced_policyare to be executed on the accelerator.

In addition to the above, using Interposition Mode imposes the following additional restrictions:

-

All code that is expected to interoperate has to be recompiled with the

--hipstdpar-interpose-allocflag i.e. it is not safe to compose libraries that have been independently compiled; -

automatic storage duration (i.e. stack allocated) variables cannot be used (directly or transitively) by the user provided callable e.g.

But why?

After what has been something of a whirlwind tour, it is not unreasonable to ask “but how does this benefit me, the C++ developer?”. The goal of HIPSTDPAR is to allow any C++ developer that is employing standard algorithms to leverage GPU acceleration with no cognitive overload. The application developer can remain firmly planted in the Standard C++ world, without having to step into the brave new world of GPU specific languages such as e.g. HIP or SYCL. Fortunately for us, our particular example allows for some limited, quantitative insight into just how close we got to this goal. The Tealeaf author has implemented the solver via multiple programming interfaces which means that we can use the cloc tool to count the lines of code needed by the tsp.cpp implementation:

|

Programming Interface |

LoC |

|---|---|

|

145 |

|

|

142 |

|

|

116 |

|

|

Standard C++ Serial |

112 |

|

Standard C++ Parallel Algorithms |

107 |

|

169 |

It is apparent that using compiler flag driven offload, as enabled by HIPSTDPAR, saves on a considerable amount or typing – up to 57% versus SYCL, for example. This enables a more natural journey towards GPU accelerated execution. As a result, the programmer can focus on the algorithm / problem solving, at least initially, and discover generic algorithmic optimisations that are profitable for the GPU, without having to dive head-first into GPU “arcana”.

TL;DR, just tell me how to go fast

Initially, HIPSTDPAR is officially supported on Linux, with Windows support forthcoming at a future date. Starting from an environment that has been set up for ROCm, using the package manager to install the hipstdpar package will, in general, bring in all the required goodness. Additionally, at the time of writing, a dependency on TBB exists, as a consequence of standard library implementation details (see e.g. Note 3). Therefore, it is necessary to install the system’s TBB package (e.g. libtbb-dev on Ubuntu). Armed thusly, and assuming that we have a main.cpp file which uses some standard algorithms to solve a given problem, the compiler driver invocation:

transparently offloads all algorithm invocations that use the std::execution::parallel_unsequenced_policy execution policy, if we are targeting a GPU compatible with the gfx906 ISA (i.e. Vega20). Otherwise, we also have to specify the target for offload:

Conclusion

In this post, we provided a high level overview of the ROCm support for offloading C++ Standard Parallel Algorithms, aiming to show how existing C++ developers can leverage GPU acceleration without having to adopt any new, GPU specific, language (e.g., HIP) or directives (e.g., OpenMP).

We believe that this standard, extremely accessible, way of exploiting hardware parallelism will be particularly beneficial for applications targeting MI300A accelerators, where the CPU and the GPU share the same pool of HBM. Although not demonstrated today, the combination of the APU architecture and HIPSTDPAR can enable fine-grained cooperation between CPU and GPU, which become true peers, accessible via a uniform programming interface.

For an in-depth look at the compiler side of HIPSTDPAR support, the interested reader should peruse the associated AMD-LLVM documentation.

If you have any questions please reach out to us on GitHub Discussions.