AMD Schola

AMD Schola is a library for developing reinforcement learning (RL) agents in Unreal Engine and training them with your favorite Python-based RL frameworks.

What if you could integrate cutting-edge reinforcement learning (RL) algorithms directly into your gaming projects - without lengthy workarounds or rebuilding environments from scratch?

AMD Schola is here to make that a reality. By connecting popular open-source RL libraries (written in Python) with the visual and physics capabilities of Unreal Engine, Schola empowers AI researchers and game developers alike to push the boundaries of intelligent gameplay.

AI researchers often develop sophisticated RL algorithms in isolated simulation environments or libraries, only to encounter challenges porting these solutions into actual games.

Meanwhile, game developers aiming to add intelligent behaviors to non-player characters (NPCs) usually must reinvent the wheel - creating new AI logic from scratch or attempting complex integrations with minimal documentation.

This gap slows down innovation: promising research remains confined to academia.

It also adds complexity: developers must replicate algorithms or shoehorn models trained elsewhere into existing game mechanics, leading to suboptimal results.

Imagine a stealth game with NPC guards. Today, developers must strike a difficult balance between guards that always know exactly where the player is (making the game difficult and unrealistic) and guards that have little awareness at all (making it too easy).

With Schola, these guards can learn realistic behaviors - like methodically searching for the player or intelligently responding to suspicious events. This flexible toolkit for AI development takes gameplay from predictable to immersive.

More engaging NPCs: NPCs adapt to player actions in real time, leading to dynamic gameplay that keeps players engaged.

Faster prototyping: With Schola, you can experiment with a variety of RL-driven behaviors, testing them quickly in the Unreal Editor.

Direct Unreal integration: Take advantage of Unreal Engine’s advanced physics, rendering, and asset libraries.

Streamlined workflows: No more hacking together prototypes and porting them. Train, test, and refine RL algorithms in the same environment you’ll use for final deployment.

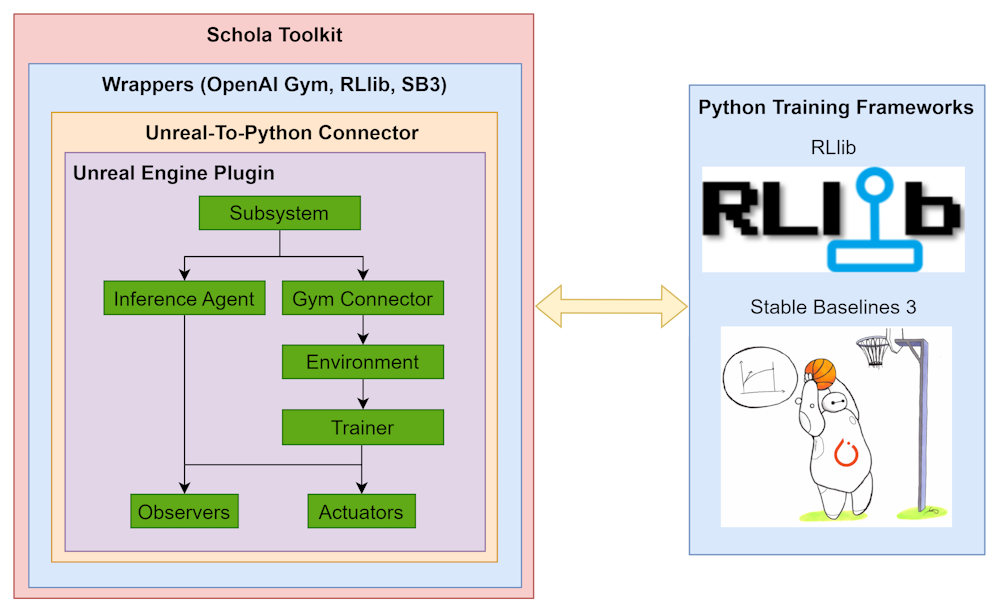

Schola supports two popular open-source RL libraries (RLlib and Stable-Baselines3) and the Gymnasium API, making it highly accessible for anyone already familiar with these tools. It provides:

Multiple RL library integrations: Seamlessly switch between or combine your favorite RL frameworks without learning a new API.

Immediate Unreal Engine compatibility: All training, testing, and debugging can happen directly within Unreal’s rich ecosystem - no environment rebuilding necessary.

Easy setup and documentation: Our online API docs walk you through the process of installing and using Schola, so you can start training your models faster.
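For readers who have not used the Gymnasium API mentioned above, here is a rough sketch of the agent-environment loop it standardizes: `reset` returns an observation and an info dict, and `step` returns an observation, a reward, `terminated` and `truncated` flags, and an info dict. The `ToyGuardEnv` class and `run_episode` helper are hypothetical stand-ins written in plain Python for illustration. They are not Schola classes and do not import Gymnasium, and a real Schola environment would run inside Unreal Engine instead.

```python
from __future__ import annotations

from typing import Any, Callable, Optional


class ToyGuardEnv:
    """Hypothetical stand-in that mirrors the Gymnasium reset/step signatures.

    A guard starts at position 0 in a 1-D corridor and must reach the far end.
    Action 1 moves right; any other action moves left.
    """

    def __init__(self, corridor_length: int = 5, max_steps: int = 20) -> None:
        self.corridor_length = corridor_length
        self.max_steps = max_steps
        self._position = 0
        self._steps = 0

    def reset(
        self, seed: Optional[int] = None, options: Optional[dict] = None
    ) -> tuple[int, dict[str, Any]]:
        # seed and options are accepted to match the Gymnasium signature;
        # they are unused because this toy environment is deterministic.
        self._position = 0
        self._steps = 0
        return self._position, {}

    def step(self, action: int) -> tuple[int, float, bool, bool, dict[str, Any]]:
        move = 1 if action == 1 else -1
        # Clamp the guard's position to the corridor bounds.
        self._position = max(0, min(self.corridor_length, self._position + move))
        self._steps += 1
        terminated = self._position == self.corridor_length  # goal reached
        truncated = not terminated and self._steps >= self.max_steps  # time limit
        reward = 1.0 if terminated else -0.01  # small per-step penalty
        return self._position, reward, terminated, truncated, {}


def run_episode(env: ToyGuardEnv, policy: Callable[[int], int]) -> tuple[float, int]:
    """Run one episode and return (total_reward, number_of_steps)."""
    obs, _info = env.reset(seed=0)
    total_reward, steps, done = 0.0, 0, False
    while not done:
        action = policy(obs)
        obs, reward, terminated, truncated, _info = env.step(action)
        total_reward += reward
        steps += 1
        done = terminated or truncated
    return total_reward, steps


if __name__ == "__main__":
    # A fixed "always move right" policy stands in for a trained RL agent.
    print(run_episode(ToyGuardEnv(), lambda obs: 1))
```

In practice an RL library such as Stable-Baselines3 or RLlib would supply the policy and drive this loop for you. The point of the sketch is only that any environment exposing this interface can be used with such a library.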

If you want a sneak peek of Schola in action, check out our examples. You’ll see how RL models can create more natural behaviors and actions.

The technical design diagram below details how Schola communicates with both Unreal Engine and the RL libraries.

Ready to dive in? We offer several ways to explore Schola, each tailored to fit different levels of interest or expertise:

Install the plugin

Try our example environments

Explore our documentation

Contribute and engage

By merging the strengths of open-source RL tools and Unreal Engine, Schola opens new doors for next-level AI in gaming. Researchers can focus on refining algorithms, while developers can easily implement them for more immersive and adaptive gameplay.

Stay tuned for new features, guides, and examples to keep advancing your ability to build immersive AI!

Install the plugin, explore the examples, and witness the difference that a learning-based approach can make.

Join the community on GitHub and help shape the future of AI-driven NPCs.

We can’t wait to see the groundbreaking ideas and intelligent game worlds you’ll create with Schola.