Basic

The Basic environment features an agent that can move in the X-dimension and receives a small reward for going five steps in one direction and a bigger reward for going in the opposite direction.

MazeSolver: Using Raycasts

The MazeSolver environment features a static maze that the agent learns to solve as fast as possible. The agent observers the environment using raycasts, moves by teleporting in 2 dimensions and is given a reward for getting closer to the goal.

3DBall: Physics Based Environments

The 3DBall environment features an agent that is trying to balance a ball on-top of itself. The agent can rotate itself and receives a reward every step until the ball falls.

BallShooter: Building Your Own Actuator

The BallShooter environment features a rotating turret that learns to aim and shoot at randomly moving targets. The agent can rotate in either direction, and detects the targets by using a cone shaped ray-cast.

Pong: Collaborative Training

The Pong environment features two agents playing a collaborative game of pong. The agents receive a reward every step as long as the ball has not hit the wall behind either agent. The game ends when the ball hits the wall behind either agent.

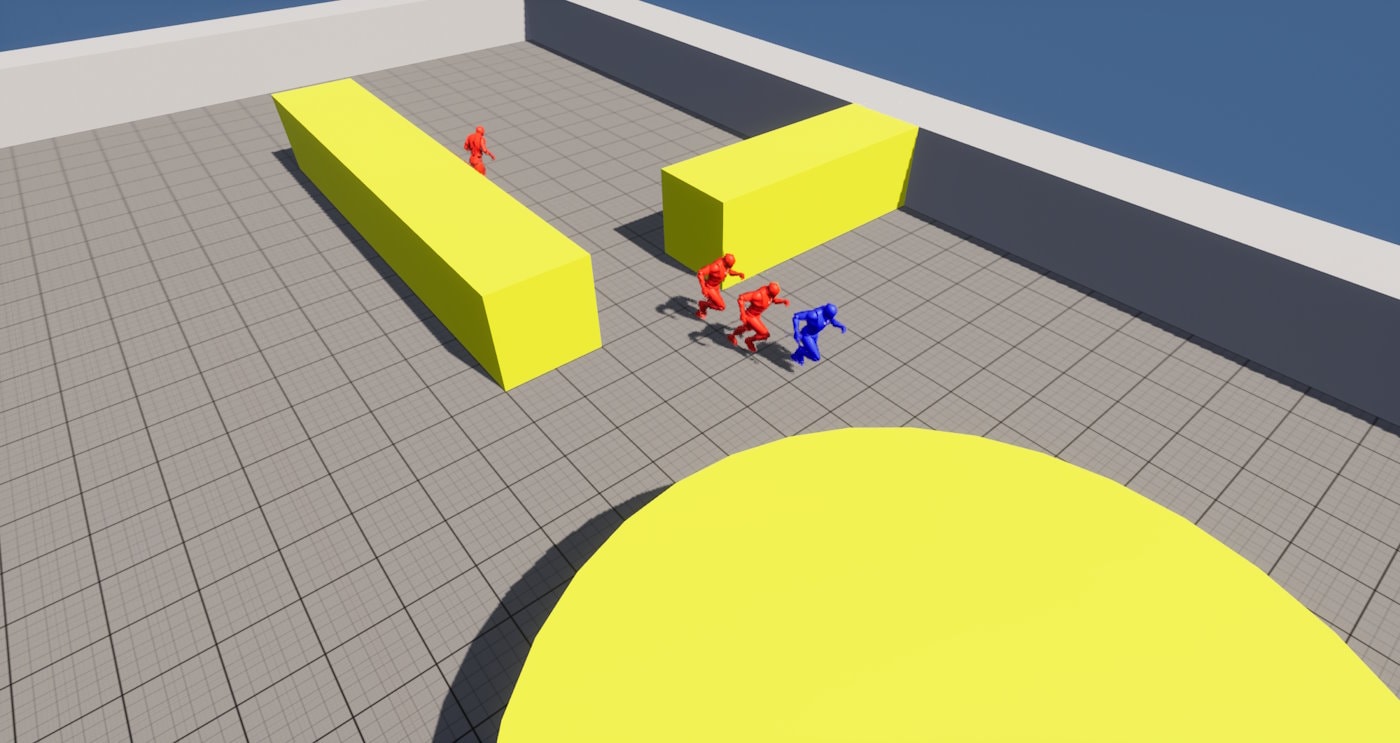

Tag: Competitive Multi-Agent Training

The Tag environment features a 3v1 game of tag, where one agent(the runner) has to run away from the other agents which are trying to collide with it. The agents move using forward, left and right movement input, and observe the environment with a combination of ray-casts and global position data.

RaceTrack: Controlling Chaos Vehicles with Schola

The RaceTrack environment features a car implemented with Chaos Vehicles, that learns to follow a race track. The agent controls the throttle, break and steering of the car, and can see it’s velocity and position relative to the center of the track.