AMD LiquidVR MultiView Rendering in Serious Sam VR with the GPU Services (AGS) Library

AMD’s MultiView Rendering feature reduces the number of duplicated draw calls in VR applications: the GPU broadcasts an object to both the left and right eyes in a single draw call instead of the application rendering it twice. This helps reduce CPU overhead, resulting in fewer dropped frames and improved rendering latency.

When we started working on single pass rendering, we first made it work with multiple GPUs because it was easier to implement and also had the greatest impact on performance. Unfortunately, most of our users don’t have multiple GPUs despite the huge benefit in VR rendering. Because of this, we planned on supporting MultiView Rendering on a single GPU. MultiView Rendering doesn’t really affect GPU workload, but it substantially reduces the CPU workload for rendering.

It was important for us to make it work with the same set of shaders and to make all supported VR rendering methods work as similarly as possible to minimize the number of bugs that only occur for one of the rendering methods but not for the others.

Using LiquidVR Affinity Multi-GPU, both eye images are rendered to the same viewport of the same render target, but one of them is on the main GPU and the other one is on the other GPU. After rendering, this image is copied to a different render target on the main GPU and passed to the VR system for display. In the case of single GPU rendering, we can’t have two images in the same viewport of a 2D render target, but we can use a render target array or a render target that contains both views side by side. We decided on using side-by-side rendering because we wanted to avoid compiling a separate set of shaders (we’d have to use Texture2DArray in MultiView Rendering instead of Texture2D) and because we wanted to experiment with MultiView for possible future implementation of MultiRes Rendering.

For MultiView Rendering on a single Radeon GPU, we used the AMD GPU Services (AGS) Library. Other than a couple of initialization functions, we only needed one function from the library: agsDriverExtensionsDX11_SetViewBroadcastMasks(). To enable MultiView Rendering with two viewports on the same render target, we called:

agsDriverExtensionsDX11_SetViewBroadcastMasks(context, 0x3, 0x1, 0);

The second and third arguments are the most important since they select the viewport (0x3 – first two viewports) and render target slice masks (0x1 – only one slice).
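As a small illustration of how such masks are formed, here is a minimal sketch that assumes (as the values above suggest) that bit i of a mask selects viewport or slice i. LowBitsMask is a hypothetical helper and is not part of the AGS Library.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not part of AGS): returns a mask with the lowest
// `count` bits set, one bit per viewport or render target slice.
inline std::uint64_t LowBitsMask(unsigned count)
{
    assert(count <= 64 && "the mask only has 64 bits");
    if (count == 64)
        return ~std::uint64_t{0};
    return (std::uint64_t{1} << count) - 1;
}

// For the call above: LowBitsMask(2) gives the viewport mask for the first
// two viewports and LowBitsMask(1) gives the slice mask for a single slice.
```

Building the masks from a count keeps the magic numbers out of the call site, which could help if more viewports are broadcast later.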

When rendering, instead of setting a single viewport, we set two at the same time:

D3D11_VIEWPORT ad3dViewports[] = {

{fLeftX, fY, fHalfWidth, fHeight, fNear, fFar},

{fRightX, fY, fHalfWidth, fHeight, fNear, fFar}

};

pd3d11context->RSSetViewports(2, ad3dViewports);
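The two viewports can be derived from the physical size of the side-by-side render target. The following is only a sketch: Viewport is a stand-in that mirrors the field order of D3D11_VIEWPORT so the example compiles without the Direct3D headers, MakeSideBySideViewports is a hypothetical helper, and the 0 to 1 depth range is an assumption (the snippet above uses fNear and fFar).

```cpp
#include <array>
#include <cassert>

// Stand-in with the same field order as D3D11_VIEWPORT.
struct Viewport
{
    float TopLeftX, TopLeftY, Width, Height, MinDepth, MaxDepth;
};

// Hypothetical helper: splits a render target of physical size
// fullWidth x height into a left-eye and a right-eye viewport.
inline std::array<Viewport, 2> MakeSideBySideViewports(float fullWidth, float height)
{
    assert(fullWidth > 0.0f && height > 0.0f);
    const float halfWidth = fullWidth * 0.5f;
    return {{
        {0.0f,      0.0f, halfWidth, height, 0.0f, 1.0f}, // left eye
        {halfWidth, 0.0f, halfWidth, height, 0.0f, 1.0f}, // right eye
    }};
}
```

With the real headers, the resulting array could be passed to RSSetViewports() in the same way as ad3dViewports above.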

For single pass rendering with Affinity Multi-GPU, we used only a single constant buffer that was different for each GPU; all other data was the same, i.e. both GPUs had rendering data (matrices, viewer positions) for both eyes, but only one was chosen for rendering. This made it easier to add support for MultiView Rendering on a single GPU where we couldn’t have different values in the same constant buffer since all data is on the same GPU.

The only change needed to fetch the correct matrices for each eye with AGS was using AmdDxExtShaderIntrinsics_GetViewportIndex() instead of the GPU index that we passed through the constant buffer to each GPU in Affinity Multi-GPU mode.

uint GetVREye()

{

// c_multiview is a constant passed to all shaders

return (c_multiview>0.0f) ?

AmdDxExtShaderIntrinsics_GetViewportIndex() :

c_eye;

}

This function returns the viewport index when MultiView is enabled and returns the eye constant when MultiView is disabled. The eye constant is either set for each rendering pass in multi-pass rendering or is uploaded to each GPU when single pass rendering in Affinity Multi-GPU mode. This index can then be used to fetch per-eye data in all shaders regardless of the rendering method used.
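To make the data flow concrete, here is a CPU-side sketch of the same selection logic. The FrameConstants and EyeData layouts are assumptions made for illustration and are not the engine's actual constant buffer; the viewport index is passed in as a plain argument because the shader intrinsic is not available on the CPU.

```cpp
#include <cassert>
#include <cstdint>

// Assumed per-eye payload (matrices, viewer positions).
struct EyeData
{
    float viewProjection[16];
    float viewerPosition[4];
};

// Assumed layout: data for both eyes is always present.
struct FrameConstants
{
    EyeData       eyes[2];
    float         multiview; // > 0 when MultiView Rendering is enabled
    std::uint32_t eye;       // eye constant for multi-pass / Affinity Multi-GPU
};

// Mirrors the shader function above.
inline std::uint32_t GetVREye(const FrameConstants& c, std::uint32_t viewportIndex)
{
    return (c.multiview > 0.0f) ? viewportIndex : c.eye;
}

// Fetches the per-eye data using the selected index.
inline const EyeData& GetEyeData(const FrameConstants& c, std::uint32_t viewportIndex)
{
    const std::uint32_t eye = GetVREye(c, viewportIndex);
    assert(eye < 2);
    return c.eyes[eye];
}
```

Because every rendering method ends up with an index of 0 or 1, the lookup itself stays identical across all of them.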

In MultiView mode, we used render targets that were double their usual width. From the perspective of the outside (i.e., higher-level) code, the render target had its original (half) size, e.g., a 256×256 render target would be created internally as 512×256 but when querying the texture size from outside the code, you’d get 256×256. This was done to minimize the amount of code that needed to be MultiView aware; only the shaders and lower-level rendering functions needed to know the actual texture size or the fact that we were even using MultiView Rendering.
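A minimal sketch of this idea, assuming a hypothetical RenderTarget wrapper (the class and its method names are invented for illustration and are not taken from the engine):

```cpp
#include <cassert>

// Hypothetical wrapper: higher-level code sees the logical size, while only
// low-level code asks for the physical width of the underlying texture.
class RenderTarget
{
public:
    RenderTarget(int width, int height, bool multiview)
        : m_width(width), m_height(height), m_multiview(multiview)
    {
        assert(width > 0 && height > 0);
    }

    int  Width() const       { return m_width; }   // logical (per-eye) width
    int  Height() const      { return m_height; }
    bool IsMultiview() const { return m_multiview; }

    // Width of the texture that is actually allocated.
    int PhysicalWidth() const { return m_multiview ? 2 * m_width : m_width; }

private:
    int  m_width;
    int  m_height;
    bool m_multiview;
};
```

For the 256×256 example above, Width() would keep returning 256 while PhysicalWidth() reports 512.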

When drawing to a render target, everything behaved the same as if we were rendering the scene in two passes, each time with a different viewport. For sampling a MultiView texture, we needed to sample the left half of the texture when rendering the left-eye view and the right half of the texture when rendering the right-eye view. To do that we adjusted the UV coordinates with a MAD (multiply-add) so instead of going from (0.0, 0.0) to (1.0, 1.0), they went from (0.0, 0.0) to (0.5, 1.0) for the left eye and from (0.5, 0.0) to (1.0, 1.0) for the right eye. How did we get different values for each eye? The answer is again AmdDxExtShaderIntrinsics_GetViewportIndex().

float4 GetMultiviewMAD() {

uint eyeIndex = AmdDxExtShaderIntrinsics_GetViewportIndex();

return float4(0.5f, 1.0f, 0.5f * eyeIndex, 0.0f);

}

To be able to use the same shader code for MultiView and non-MultiView rendering, we applied the MAD before sampling in all shaders which needed it, but we added a condition to this function so it returned (1.0, 1.0, 0.0, 0.0) when MultiView is disabled.
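The UV adjustment can be checked on the CPU with a small sketch. Float2, Float4 and ApplyMAD are stand-ins written for this example, and the eye index is passed in explicitly instead of coming from the shader intrinsic.

```cpp
#include <cassert>

struct Float2 { float x, y; };
struct Float4 { float x, y, z, w; };

// Scale in xy, offset in zw. Returns the identity transform when MultiView
// is disabled, as described above.
inline Float4 GetMultiviewMAD(bool multiviewEnabled, unsigned eyeIndex)
{
    assert(eyeIndex < 2);
    if (!multiviewEnabled)
        return {1.0f, 1.0f, 0.0f, 0.0f};
    return {0.5f, 1.0f, 0.5f * static_cast<float>(eyeIndex), 0.0f};
}

// uv * scale + offset, applied before sampling a MultiView texture.
inline Float2 ApplyMAD(Float2 uv, Float4 mad)
{
    return {uv.x * mad.x + mad.z, uv.y * mad.y + mad.w};
}
```

With MultiView enabled, the corner (1.0, 1.0) maps to (0.5, 1.0) for the left eye and (0.0, 0.0) maps to (0.5, 0.0) for the right eye, which matches the ranges given earlier.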

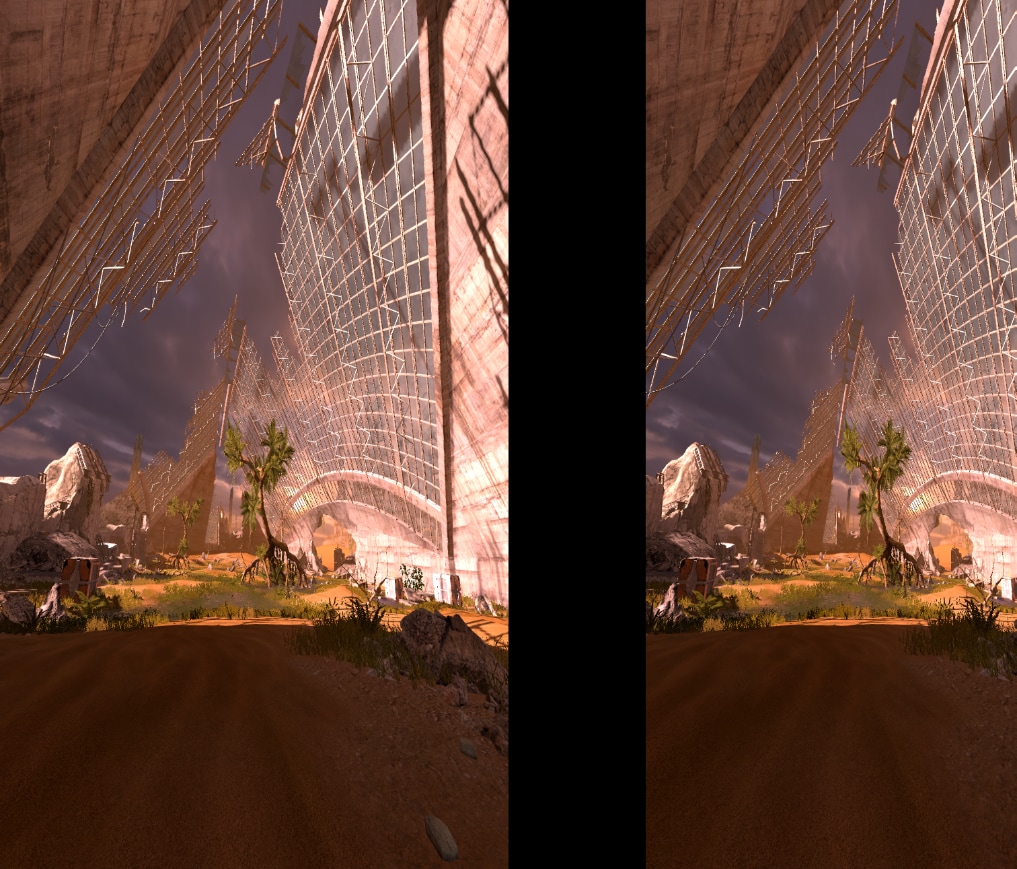

The most challenging aspect of adding MultiView support was going through all shaders and applying the MAD at the right place – these were mostly post-processing shaders since they often sampled a previously-rendered texture. In cases we missed some of them, the visual artifacts were often very hard to miss – the image rendered by those shaders were double in size and their aspect ratio was incorrect (since you will have sampled the entire image instead of only a single half):

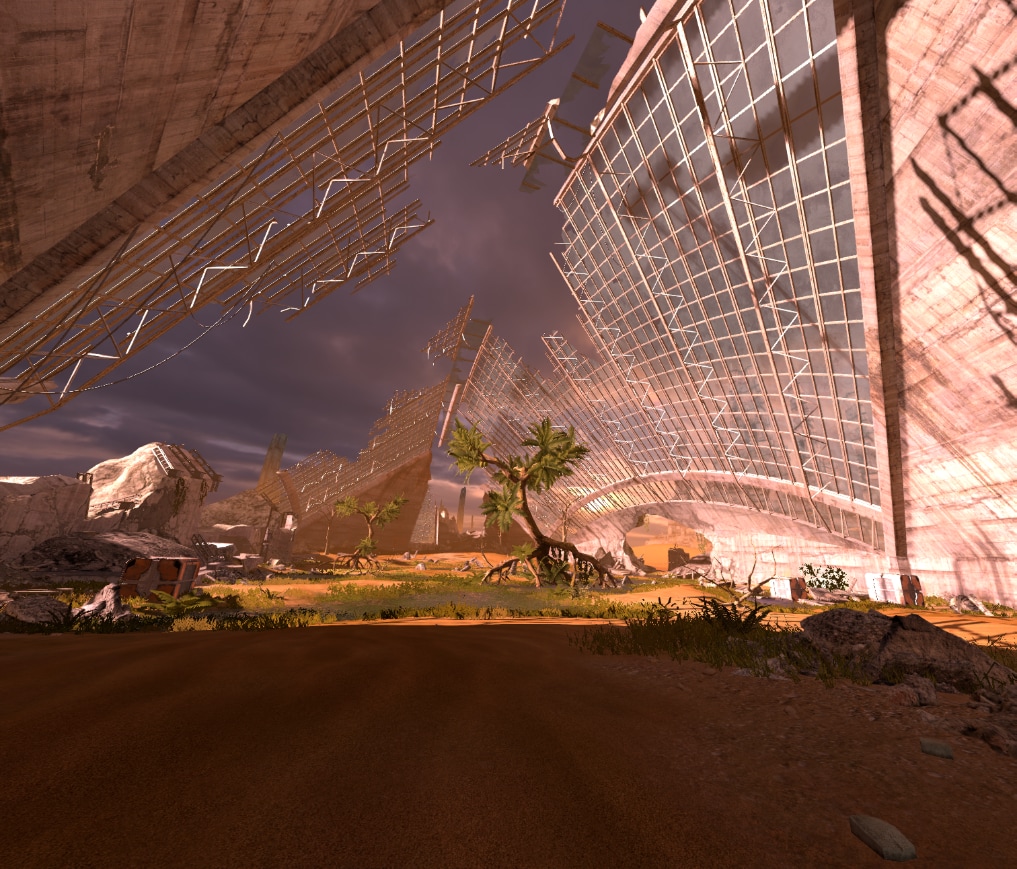

Here is a scene (left-eye view only) that is rendered correctly:

Here is the same scene, but with missing MultiView MAD in one of the post-processing shaders (FXAA):

Notice how the image is squished and parts of the right-eye view can be seen by the left eye. This problem is fixed by adjusting the UV coordinates before sampling the texture.

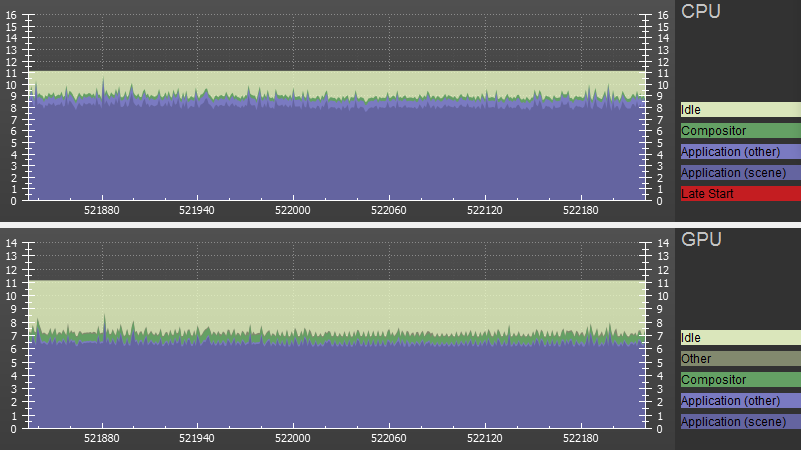

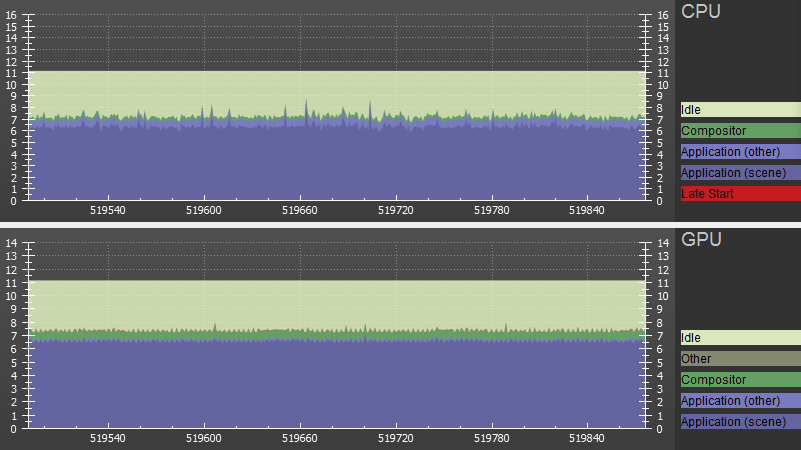

After fixing all the rendering errors, we can take a look at SteamVR frame timings from the game.

MultiView Rendering disabled:

MultiView Rendering enabled:

You can see that GPU frame time is almost the same in both cases (around 6.5 ms), but the CPU work decreased from 9 ms to 7 ms, which is similar to what we’d get with LiquidVR Affinity Multi-GPU. The performance benefit in this case is less than double because our VR renderer is already fairly optimized: preparing rendering commands (around 1 ms in this case) and shadow rendering (2 ms) are done only once per frame regardless of the rendering method. With AGS, the main rendering pass is also done only once per frame, so we spend 2 ms processing its rendering commands instead of 4 ms.
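The arithmetic behind these numbers can be written down as a tiny cost model. This is only a sketch: the 2 ms of "other" CPU work is not stated in the text and is inferred here as the remainder of the 9 ms total, and CpuFrameCost is a hypothetical structure.

```cpp
#include <cassert>

// Rough CPU cost model in milliseconds, using the figures quoted above.
struct CpuFrameCost
{
    int prepareCommandsMs = 1; // done once per frame
    int shadowsMs         = 2; // done once per frame
    int mainPassMs        = 2; // one submission of the main rendering pass
    int otherMs           = 2; // inferred remainder: 9 - (1 + 2 + 4)
};

// Without MultiView the main pass is submitted once per eye; with MultiView
// it is submitted only once.
inline int CpuFrameTimeMs(const CpuFrameCost& c, bool multiview)
{
    assert(c.prepareCommandsMs >= 0 && c.shadowsMs >= 0 &&
           c.mainPassMs >= 0 && c.otherMs >= 0);
    const int mainPassSubmissions = multiview ? 1 : 2;
    return c.prepareCommandsMs + c.shadowsMs +
           mainPassSubmissions * c.mainPassMs + c.otherMs;
}
```

Under these assumptions the model gives 9 ms without MultiView and 7 ms with it, since only the main pass portion of the frame is halved.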

Conclusion

Adding MultiView Rendering support to an engine that already supported LiquidVR Affinity Multi-GPU wasn’t that hard because we did plan ahead – we already had all the shaders set up for single-pass rendering before we started adding MultiView support. Going about it the other way around – adding support for MultiView first – would have had a greater initial hurdle, since we would have needed to tackle both single-pass and MultiView errors at the same time. On the other hand, once done, LiquidVR Affinity Multi-GPU support would be really easy to add.

Additional notes:

It’s important to make low-level functions for clearing or copying viewports MultiView-aware. If a part of the viewport is cleared for the left eye, the corresponding part must be cleared for the right eye as well. Viewport copying needs to take into account whether it’s copying from or to a MultiView texture and act accordingly.
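One way to make a partial clear MultiView-aware is to expand the requested rectangle into one rectangle per eye. The sketch below assumes the side-by-side layout described earlier; Rect and MultiviewRects are hypothetical names, and the rectangle is given in logical (per-eye) coordinates.

```cpp
#include <array>
#include <cassert>

struct Rect { int left, top, right, bottom; };

// Hypothetical helper: returns the rectangle for the left eye and the same
// rectangle shifted into the right half of a side-by-side render target.
inline std::array<Rect, 2> MultiviewRects(const Rect& r, int logicalWidth)
{
    assert(r.left >= 0 && r.left <= r.right && r.right <= logicalWidth);
    return {{
        r,                                                           // left eye
        {r.left + logicalWidth, r.top, r.right + logicalWidth, r.bottom} // right eye
    }};
}
```

A low-level clear function could then loop over both rectangles when the target is a MultiView texture and use only the first one otherwise.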

Another thing to take into account is reading a pixel shader input with a SV_Position semantic. We need to be careful because it gives pixel center coordinates relative to the render target (not relative to the viewport) so the values for the right-eye view will be the coordinates of the right half of the texture. This is not unique to MultiView Rendering but our incorrect assumption about the returned value range caused a few rendering bugs.
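If a shader needs viewport-relative coordinates, the offset of the eye's viewport can be subtracted from the SV_Position value. This is a CPU-side sketch of that correction for the side-by-side layout; ViewportRelativeX is a hypothetical helper and the eye width is the logical (per-eye) width in pixels.

```cpp
#include <cassert>

// Converts a render-target-relative x coordinate (as delivered by
// SV_Position) into a coordinate relative to the eye's own viewport.
inline float ViewportRelativeX(float svPositionX, unsigned eyeIndex, float eyeWidth)
{
    assert(eyeIndex < 2 && eyeWidth > 0.0f);
    return svPositionX - static_cast<float>(eyeIndex) * eyeWidth;
}
```

For example, with 256-pixel-wide eye views, the first pixel of the right-eye view has its center at x = 256.5 in the render target and at x = 0.5 relative to its viewport.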

Resources

LiquidVR™

LiquidVR™ provides a Direct3D 11 based interface for applications to get access to the following GPU features regardless of whether a VR device is installed on a system.

AMD GPU Services (AGS) Library

The AMD GPU Services (AGS) library provides software developers with the ability to query AMD GPU software and hardware state information that is not normally available through standard operating systems or graphics APIs.

Other guest posts by Croteam

Implementing LiquidVR™ Affinity Multi-GPU support in Serious Sam VR

This guest post by Croteam’s Karlo Jez gives a detailed look at how Affinity Multi-GPU support was added to their game engine.