Using AMD FreeSync™ Premium Pro HDR: Color Spaces


This is the first in a series of four tutorials covering different topics related to AMD FreeSync™ Premium Pro HDR Technology (referred to as FreeSync HDR hereafter).

We start here with terminology and the problems FreeSync HDR solves. The later posts then discuss tone mapping (part 2), gamut mapping (part 3), and how it all comes together with code samples (part 4).

This section gives a brief overview of the terminology used throughout this blog post series. It is by no means a comprehensive tutorial on color theory or color processing, but it should be enough to help you follow the rest of the series. For more detailed introductions to color theory, check out the links here and here.

The fundamental concept of color theory, and one we will be working with when we display our frames, is the color space. A color space can be thought of as an n-dimensional space in which every color is uniquely represented as an n-dimensional point. Different color spaces make different color properties easier to modify. For example, one color space may allow color mixing that looks natural, while another may let you change a color’s brightness and nothing else.

The most commonly used family of color spaces in games is RGB: 3D color spaces in which every color is represented by three values for red, green, and blue. In games, we often use the Rec709 and Rec2020 RGB color spaces, which differ in how intense the primary colors are when their value is set to 1. For example, a color with red = 1, green = 0, blue = 0 in Rec709 is less “red” than the same encoding in Rec2020, because at red = 1, Rec2020 represents a more intense red. This will become more important later when we talk about color gamuts. Games generally work in RGB color spaces because they allow natural mixing of colors, which makes it easy to generate color effects such as accumulating light on surfaces.

Some color spaces are designed so that a given distance between two colors corresponds to roughly the same perceived difference anywhere in the space; in particular, colors within a small radius of each other are perceived as the same or very similar. A color space with this property is called perceptually uniform. One approximately perceptually uniform color space that we will be using is CIELAB. In CIELAB, colors are encoded by L, a, and b values, where L describes the color’s lightness (its brightness), while a and b describe the color’s chromaticity. You can think of chromaticity as the true “color”, and luminosity as just how bright the color is. The perceptually uniform property of this color space will be used in part 3 when we describe a gamut mapping algorithm.
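As a minimal sketch of how CIELAB is reached from XYZ (the hub space described further on), the standard CIE formulas are shown below, assuming XYZ is normalized so the D65 white point has Y = 1. The distance function is the simple CIE76 difference, a plain Euclidean distance in Lab, which is only meaningful because the space is approximately perceptually uniform. All function names are illustrative.

```python
import math

# Assumption: XYZ is normalized so that the reference white has Y = 1.
# These are the commonly used D65 white point values.
D65_WHITE_XYZ = (0.95047, 1.0, 1.08883)

_DELTA = 6.0 / 29.0


def _lab_f(t):
    """CIELAB companding: cube root, with a linear segment near black."""
    if t > _DELTA ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * _DELTA ** 2) + 4.0 / 29.0


def xyz_to_lab(xyz, white=D65_WHITE_XYZ):
    """Convert CIE XYZ to CIELAB (L, a, b) relative to the given white point."""
    fx, fy, fz = (_lab_f(c / w) for c, w in zip(xyz, white))
    L = 116.0 * fy - 16.0  # lightness: 0 for black, 100 for the white point
    a = 500.0 * (fx - fy)  # negative = green, positive = red/magenta
    b = 200.0 * (fy - fz)  # negative = blue, positive = yellow
    return (L, a, b)


def delta_e76(lab1, lab2):
    """CIE76 color difference: Euclidean distance between two Lab colors."""
    return math.dist(lab1, lab2)
```

For example, `xyz_to_lab(D65_WHITE_XYZ)` gives L = 100 with a = b = 0, since the white point has no chromatic offset from itself.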

In the color data visualizations used by color theorists, it is often useful to show color data without brightness/luminosity. This can be done in CIELAB, but the results are usually highly irregular, so a color space called Yxy is often used instead. In this space, colors are encoded by Y, x, and y values, where Y represents luminosity and x, y represent chromaticity (x, y are different from a, b in CIELAB). This color space is usually not used in games, because there are more efficient color spaces in which luminosity is easily modified, but it is used a lot for visualization, as we will see once we investigate color gamuts. You can read more about the Yxy color space here.
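The split between luminosity and chromaticity in Yxy comes from the standard projection of XYZ: x = X / (X + Y + Z) and y = Y / (X + Y + Z), with Y kept as-is. A minimal sketch of the conversion in both directions (function names are illustrative):

```python
def xyz_to_yxy(X, Y, Z):
    """Split CIE XYZ into luminance Y and chromaticity (x, y)."""
    total = X + Y + Z
    if total == 0.0:
        raise ValueError("black has no defined chromaticity")
    return (Y, X / total, Y / total)


def yxy_to_xyz(Y, x, y):
    """Rebuild CIE XYZ from luminance Y and chromaticity (x, y)."""
    if y == 0.0:
        raise ValueError("y must be non-zero")
    return (x * Y / y, Y, (1.0 - x - y) * Y / y)
```

Because x and y are ratios, scaling a color’s XYZ values up or down changes only Y; the D65 white point, for instance, lands at roughly x = 0.3127, y = 0.3290 regardless of its brightness.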

When working with different color spaces, we need a method of converting a color from one space to another. This becomes a problem as we define more color spaces, since each new space would need to define how to convert between itself and every other one. The solution color theorists came up with is a standard color space called XYZ, to which every other color space defines a mapping. To convert a color from color space A to color space B, we convert from A to XYZ, and then from XYZ to B. In XYZ, colors are represented by X, Y, and Z values, where Y represents luminosity, while X and Z represent chromaticity but also partially encode luminosity (the two are not separated as they are in CIELAB and Yxy). You can read more about the XYZ color space here.
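For linear RGB spaces, each mapping to XYZ is a 3x3 matrix, so going from A to B through XYZ means multiplying by A’s matrix and then by the inverse of B’s. A minimal sketch, assuming the commonly published D65 matrices for Rec709 and Rec2020 (rounded to four decimals); the helper names are illustrative:

```python
# Linear RGB -> XYZ matrices (D65 white), rounded to four decimals.
REC709_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]
REC2020_TO_XYZ = [
    [0.6370, 0.1446, 0.1689],
    [0.2627, 0.6780, 0.0593],
    [0.0000, 0.0281, 1.0610],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def convert(rgb, src_to_xyz, dst_to_xyz):
    """Convert linear RGB between two spaces by going through XYZ: src -> XYZ -> dst."""
    xyz = mat_vec(src_to_xyz, rgb)
    return mat_vec(inverse3(dst_to_xyz), xyz)
```

`convert([1.0, 0.0, 0.0], REC709_TO_XYZ, REC2020_TO_XYZ)` comes out at roughly `[0.627, 0.069, 0.016]`: pure Rec709 red is a noticeably weaker red once expressed in Rec2020. In a renderer, the two matrices would normally be multiplied together once and the single combined matrix applied per pixel.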

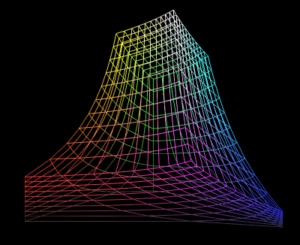

Previously we mentioned that RGB is a family of color spaces, each with different intensities of red, green, and blue when a color has its primaries set to 1. If we restrict an RGB color space so that each channel is between 0 and 1, we get an RGB cube that we call the gamut volume of that RGB color space. Since Rec2020’s primaries at a value of 1 are more intense than Rec709’s, the gamut volume of Rec2020 contains the gamut volume of Rec709.
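The containment can be checked numerically with the commonly used linear Rec709 to Rec2020 conversion matrix and its inverse (rounded to four decimals here). Because the mapping is linear and the cube is convex, it is enough to check the eight corners of the Rec709 cube. A minimal sketch with illustrative names:

```python
REC709_TO_REC2020 = [
    [0.6274, 0.3293, 0.0433],
    [0.0691, 0.9195, 0.0114],
    [0.0164, 0.0880, 0.8956],
]
REC2020_TO_REC709 = [
    [1.6605, -0.5876, -0.0728],
    [-0.1246, 1.1329, -0.0083],
    [-0.0182, -0.1006, 1.1187],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def in_unit_cube(rgb, eps=1e-4):
    """True if every channel lies in [0, 1] (small tolerance for matrix rounding)."""
    return all(-eps <= c <= 1.0 + eps for c in rgb)


# Every corner of the Rec709 cube lands inside the Rec2020 cube...
corners = [[r, g, b] for r in (0, 1) for g in (0, 1) for b in (0, 1)]
rec709_inside_rec2020 = all(in_unit_cube(mat_vec(REC709_TO_REC2020, c)) for c in corners)

# ...but the Rec2020 red primary needs R > 1 and negative G and B in Rec709.
rec2020_red_in_rec709 = mat_vec(REC2020_TO_REC709, [1.0, 0.0, 0.0])
```

Here `rec709_inside_rec2020` is `True`, while `rec2020_red_in_rec709` is about `[1.66, -0.12, -0.02]`, which falls outside the Rec709 cube.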

We can therefore transform the gamut volume of an RGB space into another color space and compare how different gamut volumes look there. This is useful because some color spaces make certain properties easier to compare, for example how bright a gamut volume allows colors to be. As mentioned before, color theorists usually visualize gamut volumes in Yxy.

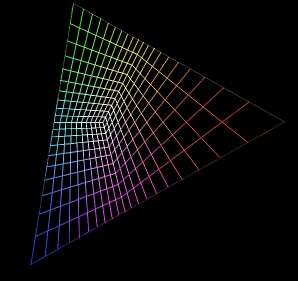

The reason is that in Yxy, we can project the gamut volume onto the chromaticity plane (the x, y plane) to see the range of “colors” a gamut volume covers, independently of how bright those colors can be. The projected gamut volume is simply called a gamut. The images below show the gamut volume in Yxy and its flattened projection, the gamut.

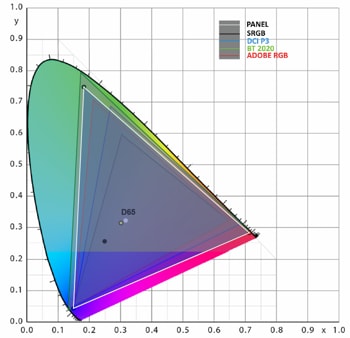
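The projection can be sketched by pushing linear RGB colors through an RGB to XYZ matrix and keeping only (x, y). Assuming the commonly published Rec709 matrix (rounded), scaling a color’s brightness leaves its chromaticity unchanged, and the three primaries land on the corners of the Rec709 gamut triangle. The function name is illustrative.

```python
# Linear Rec709 -> XYZ matrix (D65 white), rounded to four decimals.
REC709_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]


def rgb_to_xy(rgb, m=REC709_TO_XYZ):
    """Project a linear RGB color onto the chromaticity (x, y) plane."""
    X, Y, Z = (sum(m[r][c] * rgb[c] for c in range(3)) for r in range(3))
    total = X + Y + Z
    if total == 0.0:
        raise ValueError("black has no defined chromaticity")
    return (X / total, Y / total)


dim_red = rgb_to_xy([0.25, 0.0, 0.0])
bright_red = rgb_to_xy([1.0, 0.0, 0.0])  # same (x, y) as dim_red
triangle = [rgb_to_xy(p) for p in ([1, 0, 0], [0, 1, 0], [0, 0, 1])]
# triangle is approximately [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
```

Every color in the cube projects somewhere inside that triangle, which is why the gamut of an RGB space appears as a triangle in the diagram.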

The different RGB color spaces, such as Rec709 and Rec2020, have a triangular gamut in Yxy, and we often visualize them in this space to compare how many more colors one gamut represents than another. Another benefit of visualizing color gamuts in Yxy is that color theorists have calculated the gamut of all human-perceivable colors in this color space. This way, we can see how much of the perceivable range any gamut covers, and avoid covering colors that lie outside of it. In the image below, we visualize the human eye’s gamut together with the gamuts of different RGB color spaces.

The horseshoe shape with colors inside of it represents all the colors that are perceivable by the human eye (you can learn about how this shape is derived here and here). The different color gamuts are labelled by the different triangles that overlap the human-perceivable gamut. You can see that lots of colors which are perceivable are not covered by these standard color spaces!
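To put a rough number on how much larger one gamut is, a sketch can compare triangle areas in the xy plane using the standard Rec709 and Rec2020 primary chromaticities, and test whether a chromaticity lies inside a gamut. Keep in mind that the xy diagram is not perceptually uniform, so area ratios are only a coarse indication. The helper names are illustrative.

```python
# Primary chromaticities (x, y) for red, green, and blue.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
REC2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]


def triangle_area(tri):
    """Shoelace formula for the area of a triangle in the xy plane."""
    (x1, y1), (x2, y2), (x3, y3) = tri
    return 0.5 * abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))


def _edge(p, a, b):
    """Cross product sign: which side of edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def in_gamut(xy, tri):
    """True if chromaticity xy lies inside (or on the border of) the gamut triangle."""
    d = [_edge(xy, tri[i], tri[(i + 1) % 3]) for i in range(3)]
    has_neg = any(v < 0 for v in d)
    has_pos = any(v > 0 for v in d)
    return not (has_neg and has_pos)


ratio = triangle_area(REC2020) / triangle_area(REC709)  # roughly 1.89
```

With these primaries the Rec2020 triangle covers roughly 1.9 times the xy area of Rec709, and `in_gamut(REC2020[0], REC709)` is `False`: the Rec2020 red primary lies outside the Rec709 gamut.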

The last color theory concept we need is how to build a color gamut from 4 chromaticity points. Chromaticity points are points in the x, y plane of the Yxy color space (the same space used for the gamut diagram above). We define a color gamut by providing the 3 vertices of the gamut triangle (the red, green, and blue vertices) as well as a white point.

In the above image, you can see that the white point is called D65, but there are other choices of white points which could be used. For the purpose of this blog series, it’s not important to know the details of how white points work, but we will be using chromaticity points to construct color gamuts in the post on gamut mapping.

Building a color gamut from these chromaticity points is useful because it defines the gamut relative to the human eye’s perceivable gamut, so we have a better idea of which perceivable colors are covered. You can find the math needed to build an RGB to XYZ matrix from the primary and white point chromaticities here.
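One common way to do this math (a sketch, not necessarily the exact derivation linked above): turn each chromaticity into an XYZ vector with Y = 1, place the primaries as matrix columns, then scale each column so that RGB (1, 1, 1) maps exactly to the white point. The function names are illustrative.

```python
def xy_to_xyz(x, y):
    """XYZ of a chromaticity (x, y) with luminance Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def rgb_to_xyz_matrix(red, green, blue, white):
    """Build a linear RGB -> XYZ matrix from primary and white point chromaticities."""
    cols = [xy_to_xyz(*p) for p in (red, green, blue)]
    P = [[cols[c][r] for c in range(3)] for r in range(3)]  # primaries as columns
    W = xy_to_xyz(*white)
    P_inv = inverse3(P)
    # Per-primary scale factors S solve P * S = W, so RGB (1, 1, 1) maps to the white point.
    S = [sum(P_inv[r][c] * W[c] for c in range(3)) for r in range(3)]
    return [[P[r][c] * S[c] for c in range(3)] for r in range(3)]


M = rgb_to_xyz_matrix((0.64, 0.33), (0.30, 0.60), (0.15, 0.06), (0.3127, 0.3290))
# M[1], the luminance row, is approximately [0.2126, 0.7152, 0.0722]
```

Feeding in the Rec709 primaries and the D65 white point reproduces the familiar Rec709 matrix; the same function works for any display whose primaries and white point are known.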

In games, we want to encode colors efficiently, limiting bandwidth and ALU operations while preserving the precision of the colors we want to display. The current standard for standard dynamic range (SDR) displays is to encode 8 bits per color channel in an RGB color space, for a total of 24 bits per color. Naively, we could assign an equal number of bits to bright and dark colors, but the human eye is more sensitive to changes in dark colors than in bright ones, so we would be over-representing bright colors we can barely tell apart and under-representing the dark colors we are more sensitive to.

With only 8 bits per color channel, this problem becomes very noticeable, so we need a remapping that assigns more of the available precision to dark colors. This remapping is called a gamma curve (sometimes called a transfer function): a non-linear curve that spreads perceptually distinct colors over wider ranges of code values, which solves most of the color encoding problem.

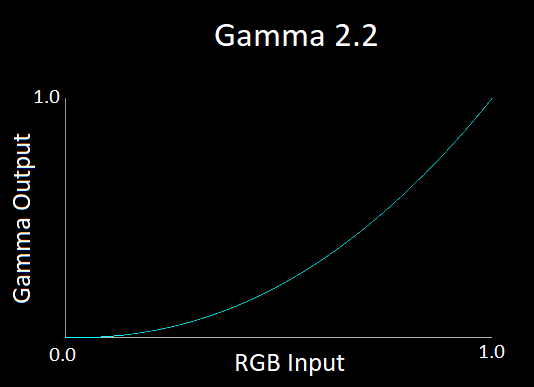

Gamma curves are designed around the human eye’s sensitivity to light over a particular brightness range, so gamut volumes that encode different brightness ranges end up with different gamma curves. For example, most Rec709 displays have a gamma curve that games commonly approximate with the gamma 2.2 curve shown in the image below.

This curve takes a color channel value between 0 and 1 as input and maps it to a new value between 0 and 1, where darker colors are mapped to a larger part of the output range and brighter colors to a smaller part.

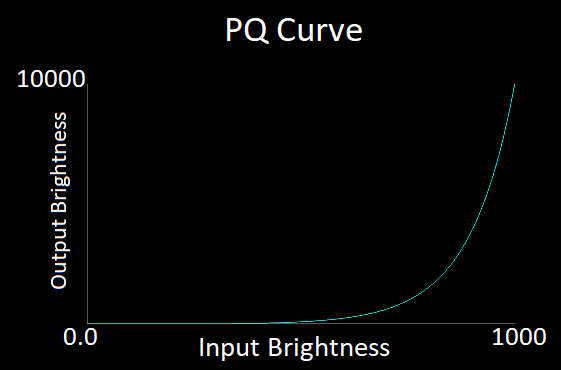
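The effect on precision can be made concrete with a small sketch, assuming a pure power-law gamma 2.2 curve as described above and 8-bit quantization: count how many of the 256 code values decode to the darkest 10% of linear light, with and without the curve. The function names are illustrative.

```python
GAMMA = 2.2


def encode_gamma22(linear):
    """Linear [0, 1] -> encoded [0, 1] with a pure power-law gamma 2.2 curve."""
    return max(0.0, min(1.0, linear)) ** (1.0 / GAMMA)


def decode_gamma22(encoded):
    """Encoded [0, 1] -> linear [0, 1]."""
    return max(0.0, min(1.0, encoded)) ** GAMMA


def codes_at_or_below(linear_threshold, bits=8, gamma=True):
    """Count how many of the 2**bits code values decode to at most linear_threshold."""
    top = 2 ** bits - 1
    decode = decode_gamma22 if gamma else (lambda e: e)
    return sum(1 for code in range(top + 1) if decode(code / top) <= linear_threshold)


linear_codes = codes_at_or_below(0.1, gamma=False)  # 26 of 256 codes
gamma_codes = codes_at_or_below(0.1, gamma=True)    # 90 of 256 codes
```

With the gamma curve, the darkest tenth of the linear range receives 90 code values instead of 26, which is exactly the reallocation toward dark colors the curve is meant to provide.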

In HDR, the standard color formats use 10 to 12 bits per color channel, so more colors can be encoded. However, HDR gamut volumes are also much larger, so we still face the same problem and must carefully consider how we map colors that are perceptually similar compared to ones that aren’t. In the HDR10 standard, for example, a PQ curve is used instead of a gamma 2.2 curve; it can be seen in the image below.

The PQ curve can be applied per color channel, just like the gamma curve, but it can also be applied only to the luminance of a color. In that case, the color must be encoded in a space like Yxy, where one value represents luminosity and the others represent chromaticity.
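As a sketch, the PQ curve (as defined in SMPTE ST 2084) maps absolute luminance up to 10,000 nits to a signal in [0, 1] using a handful of fixed constants. The luminance-only variant simply encodes Y and leaves the chromaticity untouched. Function names are illustrative.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def pq_encode(nits):
    """Absolute luminance in nits (cd/m^2) -> PQ signal in [0, 1]."""
    y = max(0.0, min(nits / PQ_PEAK_NITS, 1.0)) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def pq_decode(signal):
    """PQ signal in [0, 1] -> absolute luminance in nits."""
    e = max(0.0, min(signal, 1.0)) ** (1.0 / M2)
    return PQ_PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_encode_luminance_only(Y_nits, x, y):
    """Apply PQ to the luminance of a Yxy color and keep its chromaticity as-is."""
    return (pq_encode(Y_nits), x, y)
```

For example, 100 nits encodes to roughly 0.508, so about half of the signal range is spent below 100 nits even though the curve reaches up to 10,000 nits.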

You will notice that the PQ curve is much flatter than the gamma 2.2 curve. That’s because we are expecting color values that have a far wider range of luminance than we had before. The details for how the PQ curve works and was developed are not important for this set of blog posts, but if you want to learn more about gamma curves, transfer functions, and PQ, you can find more here and here.

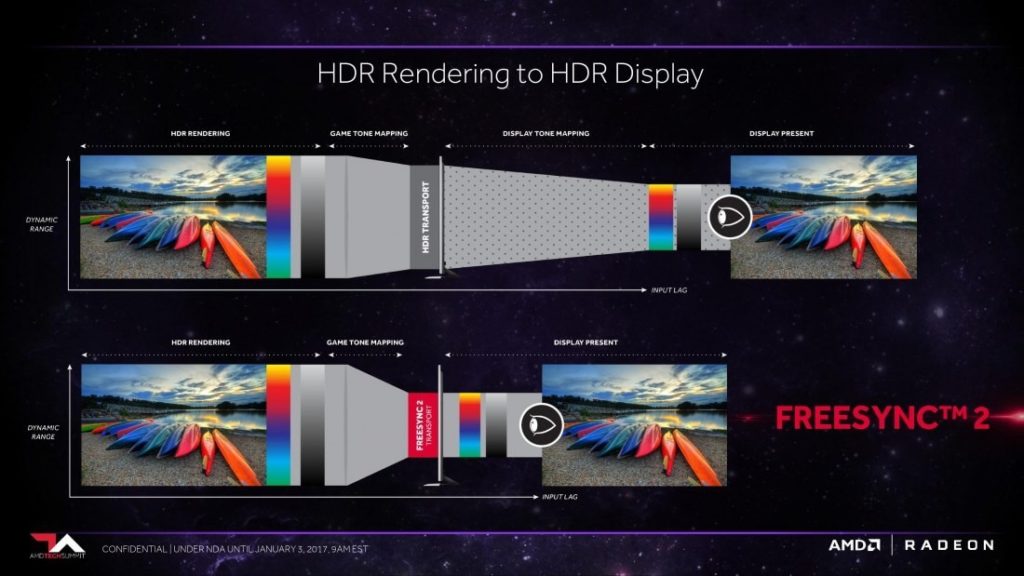

FreeSync HDR is an open standard developed in part by AMD to give game developers low-latency HDR displays and let games use the displays’ full color and brightness range. The major latency problem in current displays is that games tone map and gamut map their frames to a standard HDR color space, and the monitor then tone maps and gamut maps these frames again to its native color space, doubling the work and increasing latency, as can be seen below.

FreeSync HDR removes this latency by having the game tone map and gamut map directly to the monitor’s native color space, so the monitor only needs to display the image without modification, which lowers latency.

Another issue is that regular monitors tend to apply filters and post-processing to the game’s frame before it is displayed, and the developer can neither control nor disable this post-processing. These filters generally over-brighten or over-saturate the frame, making the game look different on different monitors.

This issue is solved by using FreeSync HDR’s display modes, which guarantee that the frame provided by the game will be displayed as-is, without any post-processed modification. The monitor also must pass an AMD certification process which verifies that colors are accurately displayed, resulting in a more consistent display of the game across different FreeSync HDR monitors.

As with the original FreeSync standard, variable refresh rate is enabled automatically whenever the app is in fullscreen exclusive mode. To enable FreeSync HDR as well, the game needs to set the display mode it wants to use during initialization, and query the monitor’s color gamut and brightness range so that it can correctly tone map and gamut map its frames to the monitor’s native range. We will go over how the game can do tone and gamut mapping for FreeSync HDR, and the challenges this presents, in the next blog posts.

FreeSync HDR provides 2 new display modes called FreeSync2_scRGB and FreeSync2_Gamma22, which provide the benefits discussed above while offering different ways of integrating FreeSync HDR into existing game engines. Below, we go over what each display mode provides, when it should be used, and how to integrate it into a renderer.

The FreeSync2_scRGB display mode was made to ease developers with a Rec709 pipeline into rendering in HDR without significantly changing their color pipelines. The colors in the framebuffer for this mode are expected to be encoded in Rec709, but a color that is outside the Rec709 RGB cube and inside the display’s native color space is still displayed correctly (colors outside the native color space are clamped to it).

The framebuffer is expected to be stored in an R16G16B16A16 float format with no gamma curve applied, and each color channel needs to be multiplied by the monitor’s dynamic range and then divided by 80 (scRGB defines a value of 1.0 as 80 nits).
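A minimal sketch of the FreeSync2_scRGB output step, under two assumptions: the frame has already been normalized so that 1.0 corresponds to the monitor’s range, and `display_range_nits` (a hypothetical parameter name) holds the queried dynamic range in nits. Storage is shown as four half floats per pixel, assuming little-endian memory layout.

```python
import struct

SCRGB_NITS_PER_UNIT = 80.0  # scRGB defines a value of 1.0 as 80 nits


def to_scrgb(linear_rec709, display_range_nits):
    """Scale a linear Rec709 color, normalized to the display's range, into scRGB units.

    No gamma curve is applied and nothing is clamped: channels above 1 or below 0
    describe colors outside the Rec709 cube, which this mode can still display.
    """
    scale = display_range_nits / SCRGB_NITS_PER_UNIT
    return [c * scale for c in linear_rec709]


def pack_rgba16f(rgb, alpha=1.0):
    """Pack one pixel as four little-endian IEEE half floats (R16G16B16A16 float)."""
    return struct.pack("<4e", *rgb, alpha)
```

On a display with a 600-nit range, a normalized `[1.0, 0.5, 0.0]` becomes `[7.5, 3.75, 0.0]`; the half-float format is what allows these values above 1 (and negative values) to be stored.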

The FreeSync2_Gamma22 display mode was made to let developers with more sophisticated HDR color pipelines, including their own tone and gamut mapping, integrate them easily with FreeSync HDR. The colors in the framebuffer for this mode are expected to be encoded in the display’s native gamut, which the application queries, and any channel value outside the 0-1 range is clamped into it. The framebuffer is expected to be stored in an R10G10B10A2 unorm format with a gamma 2.2 curve applied.
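A sketch of the final FreeSync2_Gamma22 encode for one pixel, assuming the color is already tone and gamut mapped to linear values in the display’s native gamut: clamp to [0, 1], apply gamma 2.2, quantize to 10 bits, and pack. The bit layout (red in the lowest 10 bits, 2-bit alpha in the top bits) follows the usual R10G10B10A2 unorm convention; treat it as an assumption for any particular graphics API. The function name is illustrative.

```python
def pack_r10g10b10a2_gamma22(rgb_native, alpha=1.0):
    """Encode a linear color in the display's native gamut as a 32-bit R10G10B10A2 word."""

    def clamp01(v):
        return max(0.0, min(1.0, v))

    # Clamp, apply the gamma 2.2 curve, then quantize each channel to 10 bits (0..1023).
    r, g, b = (round(clamp01(c) ** (1.0 / 2.2) * 1023) for c in rgb_native)
    a = round(clamp01(alpha) * 3)  # 2-bit alpha (0..3)
    return r | (g << 10) | (b << 20) | (a << 30)
```

White with full alpha packs to `0xFFFFFFFF`, and out-of-range inputs such as `(2.0, -1.0, 0.0)` are clamped before encoding, mirroring the clamping behavior described for this mode.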

The goal of FreeSync HDR is to provide developers with low-latency HDR displays with variable refresh rate, along with the ability to make their games display more consistently and accurately. AMD’s approach is to provide FreeSync HDR display modes in which the monitor displays the game without modification, while the developer is given information on what the monitor can and can’t display, so that frames can be adapted to fit within the monitor’s capabilities.

In the next post, we will go over how tone mapping is affected by FreeSync HDR and how to modify current tone mappers to take advantage of a FreeSync HDR monitor’s true brightness range.

A Beginners Guide to Colorimetry – medium.com

The difference between CIE LAB, CIE RGB, CIE xyY and CIE XYZ – wolfcrow.com

Basic Color Science for Graphics Engineers – wordpress.com