The diffusion-based video enhancement feature in Topaz Video™ runs locally on AMD Ryzen™ processors with integrated AMD RDNA™ 3 architecture-based AMD Radeon™ graphics, and on discrete AMD Radeon graphics cards based on the AMD RDNA 3 and RDNA 4 architectures.

AMD RDNA™ 3 and newer architecture-based GPU technology accelerates groundbreaking AI features.

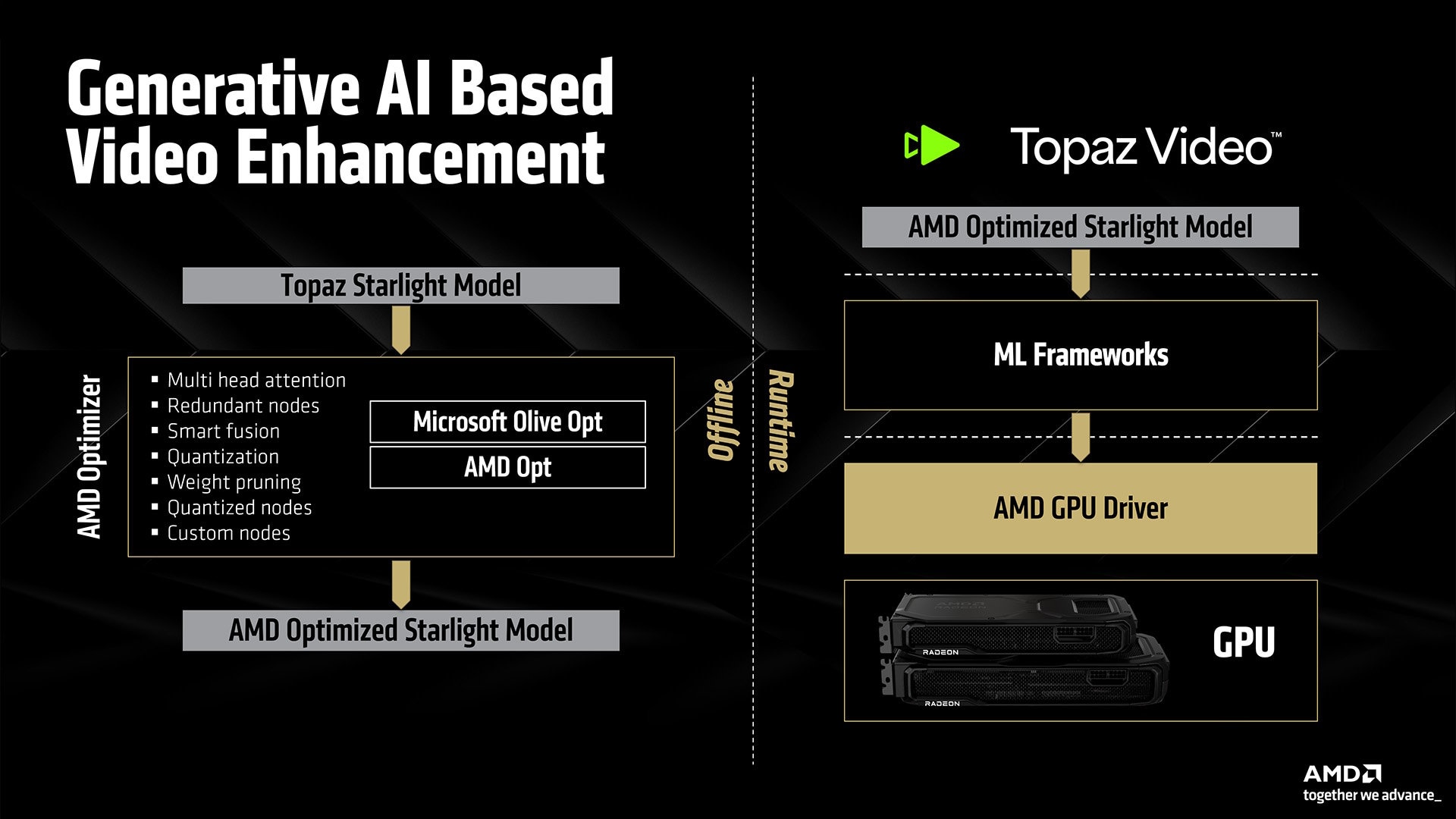

The collaboration between Topaz Labs® and AMD enabled the efficient deployment of AI-powered video enhancement models, meticulously optimized for AMD GPU architectures using AMD Optimizer toolchains. These optimizations maximize parallel processing throughput while minimizing latency for real-time performance.

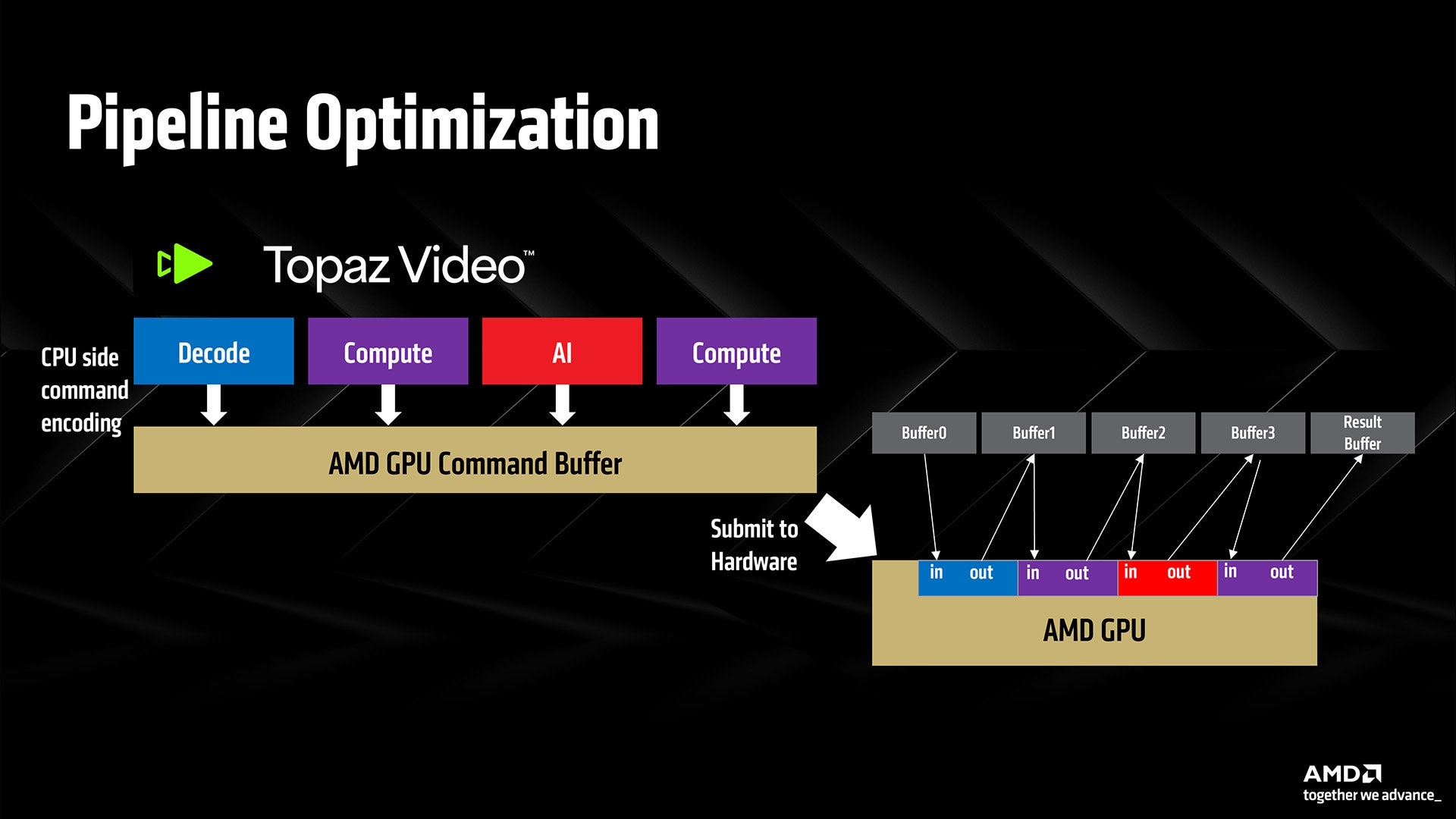

Through close engineering collaboration, the video editing pipeline was further enhanced with advanced GPU pipelining and buffer sharing techniques. These improvements boost end-to-end responsiveness and deliver substantial gains across the full video processing workflow.

Starlight is a groundbreaking AI model from Topaz Labs that transforms low-resolution and degraded video into HD quality.

It’s the first-ever diffusion AI model for video enhancement and the only one to achieve full temporal consistency, ensuring seamless frame-to-frame motion (source: “Topaz Video 7.0 - NEW Starlight (Local) AI Model”, Topaz Video Releases, Topaz Community). Users can access the model in Topaz Video.

AMD partnered with Topaz Labs to bring these video enhancement features to client devices accelerated by AMD Radeon GPUs, on both integrated and discrete graphics platforms. The model went through several optimization and transformation phases in the AMD Optimizer toolchains, resulting in up to 3.3x higher performance and significantly lower memory usage compared with the base model when running on AMD platforms [1][2].

The diagram below gives a brief overview of the process and software/hardware stack accelerating these use cases:

Fig1: optimization and runtime flow of deploying the Topaz Video enhanced model.

Topaz Labs’ groundbreaking AI research, coupled with the optimized AI software and hardware capabilities of AMD Radeon GPUs, made it possible to bring this technology to a broad audience on their own local devices. The model uses the WMMA (Wave Matrix Multiply-Accumulate) AI hardware accelerators in the latest AMD GPUs to deliver the performance these features require.

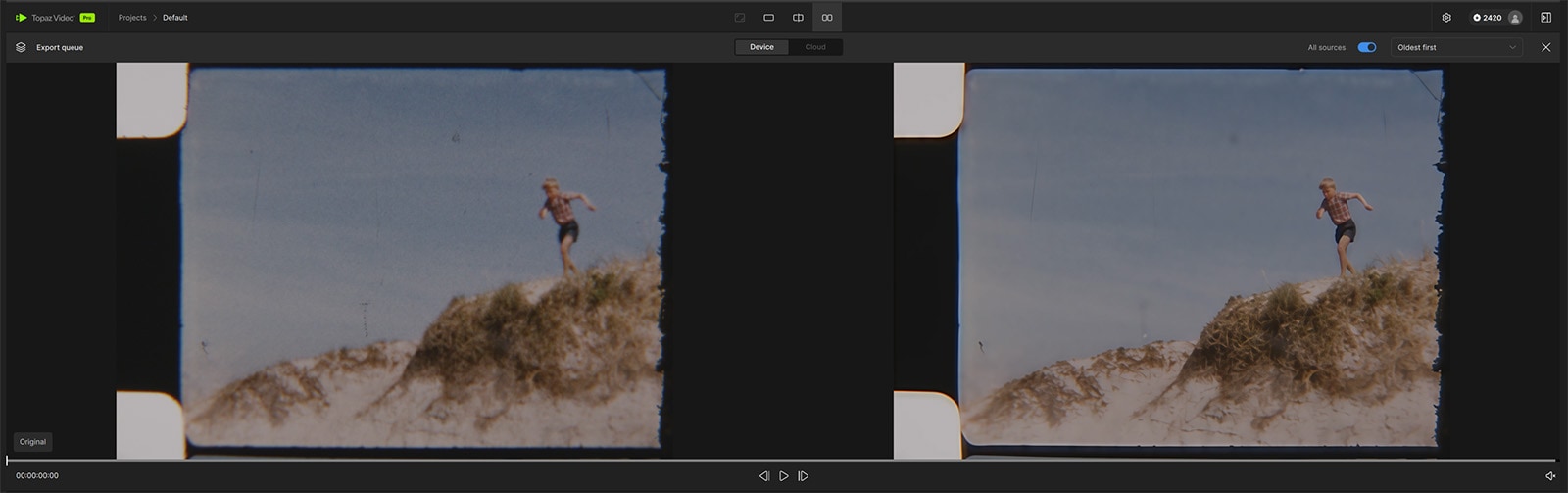

Fig2: Before and after screen capture of a Topaz Video enhancement feature.

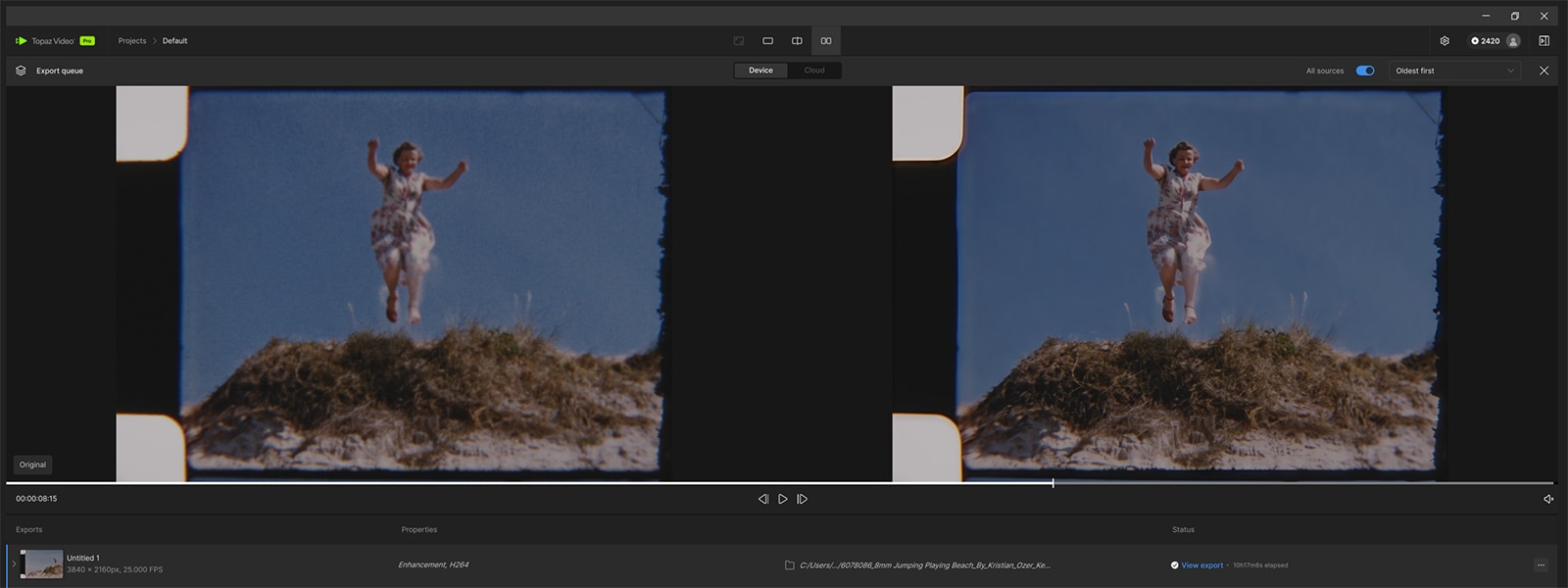

Fig3: Before and after screen capture of a Topaz Video enhancement feature.

Alongside the release of the AI-powered Topaz Video application, significant optimizations have been developed across the video decode and AI computation pipeline. These enhancements streamline GPU execution by eliminating redundant memory copies, reducing costly resource interop operations, and minimizing CPU synchronization overhead. As a result, the full video processing pipeline achieves substantially higher GPU utilization and responsiveness. These advancements, optimized specifically for AMD Radeon GPUs, will be available in future versions of Topaz Video AI.

Fig4: End-to-end pipeline optimizations for Topaz Video AI.
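
The pipelining idea described above can be illustrated with a simplified, CPU-side Python sketch. It is not Topaz Labs’ or AMD’s implementation: the real pipeline runs on the GPU, and neither the AMD Optimizer toolchains nor Topaz Video internals are publicly documented. The functions `decode`, `enhance`, `feed`, `stage`, and `run_pipeline` are hypothetical placeholders, and `time.sleep` stands in for real decode and inference work. The sketch assumes a two-stage decode-then-enhance chain connected by bounded queues, so each stage can start on the next frame while the following stage is still busy, and the queue depth caps how many frames are in flight. It illustrates stage overlap only, not the memory-copy or interop savings mentioned above.

```python
import queue
import threading
import time

# Hypothetical end-of-stream marker passed down the pipeline.
SENTINEL = object()


def decode(frame_id):
    """Hypothetical stand-in for hardware video decode of one frame."""
    time.sleep(0.005)  # simulated decode latency
    return {"id": frame_id, "pixels": f"decoded-{frame_id}"}


def enhance(frame):
    """Hypothetical stand-in for running the AI enhancement model on one frame."""
    time.sleep(0.005)  # simulated inference latency
    frame["pixels"] = frame["pixels"].replace("decoded", "enhanced")
    return frame


def feed(num_frames, outbox):
    """Source stage: emit frame indices, then the end-of-stream marker."""
    for i in range(num_frames):
        outbox.put(i)  # blocks while the downstream queue is full (back-pressure)
    outbox.put(SENTINEL)


def stage(work, inbox, outbox):
    """Generic stage: process items as they arrive and forward the results."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # propagate end-of-stream to the next stage
            return
        outbox.put(work(item))


def run_pipeline(num_frames, depth=4):
    """Overlap decode and enhancement; `depth` caps frames queued between stages."""
    to_decode = queue.Queue(maxsize=depth)
    to_enhance = queue.Queue(maxsize=depth)
    done = queue.Queue(maxsize=depth)
    workers = [
        threading.Thread(target=feed, args=(num_frames, to_decode)),
        threading.Thread(target=stage, args=(decode, to_decode, to_enhance)),
        threading.Thread(target=stage, args=(enhance, to_enhance, done)),
    ]
    for w in workers:
        w.start()
    # Drain results concurrently so no bounded queue can stall the chain.
    results = []
    while (item := done.get()) is not SENTINEL:
        results.append(item)
    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    frames = run_pipeline(8)
    print([f["pixels"] for f in frames][:2])  # ['enhanced-0', 'enhanced-1']
```

Because each stage has a single worker and the queues are FIFO, frame order is preserved. The `time.sleep` calls release Python’s global interpreter lock, so the simulated stages genuinely overlap; in a GPU pipeline the equivalent overlap would typically come from asynchronous work submission and shared buffers rather than Python threads.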

The collaboration between Topaz Labs and AMD exemplifies how deep integration between AI model innovation and GPU architecture can unlock transformative performance gains and real-time generative AI on consumer devices. By leveraging AMD Optimizer toolchains and advanced GPU pipelining techniques, developers can build on these advancements and deploy scalable diffusion-based video enhancement models with real-time responsiveness on consumer-grade AMD Radeon hardware.

This partnership not only demonstrates the power of AMD Radeon GPUs in accelerating generative AI workloads but also sets a precedent for future AI applications that demand high throughput and low latency. For developers, this is a call to explore and build upon these optimizations, whether through custom model tuning, pipeline enhancements, or integration into broader AI processing workflows, to push the boundaries of what’s possible in local AI-powered media applications.

[1] SHO-31: Testing as of April 2025 by AMD. All tests conducted using Amuse 3.0 RC and Adrenalin 24.30.31.05 Driver. Sustained performance average (elapsed time) of multiple runs using the specimen prompt: “Craft an image of a cozy fireplace with crackling flames, flickering candles, and a pile of cozy blankets nearby”. Models tested: Stable Diffusion 1.5, Stable Diffusion XL 1.0, SDXL Turbo, SD 3.0 Medium, Stable Diffusion 3.5 Large and Stable Diffusion 3.5 Large Turbo against Microsoft Olive conversion of the base model. ASUS ROG Flow Z13 equipped with an AMD Ryzen™ AI MAX+ 395 processor and 64GB of DDR5 8000 MT/s memory and Windows 11 Pro 24H2. Variable Graphics Memory set to 48GB. Performance may vary. SHO-31.

[2] STX-118: Testing as of April 2025 by AMD. All tests conducted using Amuse 3.0 RC and Adrenalin 24.30.31.05 Driver. Sustained performance average (elapsed time) of multiple runs using the specimen prompt: “Craft an image of a cozy fireplace with crackling flames, flickering candles, and a pile of cozy blankets nearby”. Models tested: Stable Diffusion 1.5, Stable Diffusion XL 1.0, SDXL Turbo, SD 3.0 Medium and Stable Diffusion 3.5 Large Turbo against Microsoft Olive conversion of the base model. ASUS Zenbook S16 equipped with an AMD Ryzen™ AI 9 HX 370 processor and 32GB of DDR5 7500 MT/s memory and Windows 11 Pro 24H2. Variable Graphics Memory set to 16GB. Performance may vary. STX-118.

Links to third-party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites, and no endorsement is implied. GD-98

AMD Optimizer toolchains are currently not publicly available. To request access, please contact your AMD account representative directly.

Topaz Labs, Topaz Video, and the Topaz Labs and Topaz Video logos are trademarks or registered trademarks of Topaz Labs.