Preface to the CPU performance optimization guide

Welcome to this blog series on CPU performance analysis and optimization methods. It will be updated regularly so please check back soon.

Before diving into the specifics, readers are highly recommended to familiarize themselves with the CPU hardware performance analysis tools and counters.

In this initial blog, we will first clarify some important concepts: what is performance, how to test performance, and how to achieve high performance. After answering the three questions, a clear path toward performance optimization will emerge for readers to follow. And subsequent blog posts will elaborate on the details. We hope this series proves valuable to your projects.

Chapter 1 – Overview of performance

High performance programs usually are meant to be faster, and the general criteria is speed. However, “faster” is a relative term. Merely being faster doesn’t always equate to better performance. In some cases, both compared programs may be poor in performance.

Also, efficiency and speed are related concepts, but they remain distinct from each other. Efficiency specifically pertains to the optimal utilization of resources without any wastage. It emphasizes the ability of programs to fully leverage hardware resources to their advantage.

Indeed, performance is tied to certain metrics. The most prevalent metric is speed, which directly reflects how quickly a program executes. Additionally, metrics such as IPC (Instructions Per Cycle) and CPI (Cycles Per Instruction) offer more stringent evaluations. However, it’s important to note that these metrics alone do not determine overall performance.

1.1. Latency

Latency is the execution time per unit number of instructions on the CPU. In general, low latency means that the program requires less reaction time. This metric is measured by the number of clock cycles per instruction (CPI), with lower values being preferable. Therefore, latency is undeniably one of the most critical criteria for evaluating performance.

1.2 Throughput

Throughput is the number of instructions executed by the CPU per unit of time. In general, a high throughput means that a program can perform more operations in the same amount of time. This metric is quantified by the number of instructions executed per clock cycle (IPC), with higher values being preferable. Hence, throughput is another crucial criterion for evaluating performance.

1.3. Power consumption

Clearly, programs also run on mobile devices, such as laptops and phones where power consumption becomes crucial. Generally, low power consumption means that the program is more energy efficient. And this is measured by power consumption. Therefore, power consumption is one of the important criteria for evaluation on mobile devices.

1.4 Stability

Program runtimes can vary. If the average speed is acceptable, attention may shift to occasional instances of high latency. Typically, a stable program operates without lagging. And this is measured by the variance of performance data and the highest latency data.

1.5. Summary

To conclude, performance is defined by various metrics and depends on the specific application scenario. A good program performs well in all scenarios and can strike a good balance between different metrics.

Chapter 2 – Performance test

A performance test is crucial before we conduct a performance analysis. Accurate results lay a solid foundation for all subsequent analysis. Performance metrics, crucial for drawing meaningful insights, come exclusively from performance test data and performance analysis tools.

2.1. Microbenchmarking

We should try to construct a reasonable and minimal benchmark test for the program. Testing the entire program for performance has the following drawbacks:

-

According to the Pareto principle, although only a small portion of the code may cause performance issues, it doesn’t mean that the rest of the program takes no time at all. Irrelevant code may also impact performance test results. For instance, the program might run for a considerable amount of time before reaching the exact code that needs analysis.

-

Furthermore, building the entire program can be time-consuming. For large projects, several hours of built time is quite common. Dealing with project dependencies is even more of a nightmare. It’s not wise to waste time on this.

Therefore, it’s essential to control the scope of testing, minimize sources of interference, and shorten build times. Creating a benchmarking program solely for the code of interest and conducting performance test and analysis against these benchmarks is much more feasible. This approach is what we refer to as microbenchmarking.

2.2 High-precision timer

With benchmarking, the easiest way to gather performance data is to run it and measure the time it takes for each run, where a high-precision timer is much needed.

The C++ chrono library provides some excellent timing tools that make it easy to measure the time between any two points in your program. By definition, std::chrono::steady_clock is best suited for timing measurements.

2.3. Performance analysis

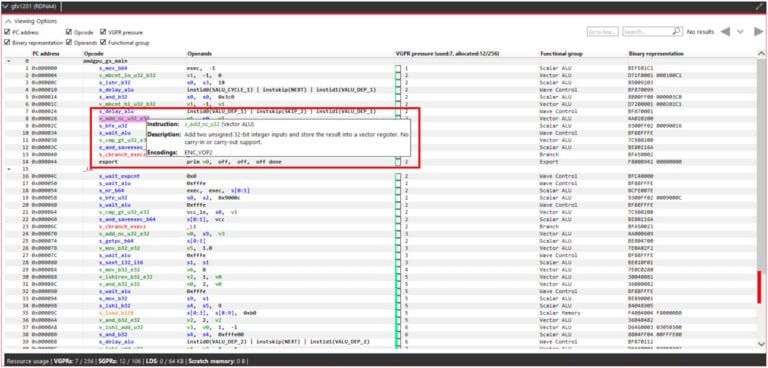

While timing statistics only offer a general picture, precise measurement requires a performance analyzer to pinpoint hot code segments and the CPU cycles they consume.

Most modern performance analyzers can track CPU hardware performance counters, or PMCs. These counters trace hardware events, allowing identification of CPU clock cycles and hardware-level performance issues in specific code segments.

Proficiency in using a performance analyzer necessitates familiarity with assembly language and PMCs. For example, AMD CPUs commonly use the AMD μProf analyzer. Detailed descriptions of PMCs can be found in the “Processor Programming Reference (PPR) for AMD.” You can search and download this reference from https://www.amd.com/. For instance, in the Processor Programming Reference (PPR) for AMD Family 19h Model 11h, Revision B1 Processors the section detailing PMCs is 2.1.13 Performance Monitor Counters.

Chapter 3 – How to achieve high performance

3.1. Assessing performance

Often developers make guesses about performance, but this is a very bad idea. Performance metrics can only come from test and analysis.

Sometimes there are vague statements, such as “use templates instead of macros” or “virtual functions are slower”. It is difficult to answer whether these are correct or not without testing the specific scenarios.

Sometimes the compiler may surprise us – the same logical code may generate completely different assembly code and the same assembly code may perform differently on different CPUs. So the most reliable sources for performance analysis are assembly and PMC. Don’t trust the compiler and CPU completely.

3.2 High performance

How to get high performance for your program? Below are the necessary skills:

-

Right algorithms

-

Effective use of CPU resources

-

Good memory access

-

No extra computing

-

Efficient use of programming languages

-

Testing and analyzing data

This series will demonstrate these skills in the upcoming cases. It will also explore hardware architecture and delve into the underlying aspects of programming languages. This approach will provide insights into code from the perspectives of compilers and CPUs, facilitating performance improvements.

Chapter 4 – Epilogue

CPU hardware is constantly changing, so is programming languages. This provides an opportunity for developers to optimize compilers. The journey towards better compiler and programming languages has no end. Understanding the underlying workings of computers and programs, mastering hardware utilization, and developing a standardized analysis process are crucial for adapting to these dynamic changes.

In this series, we’ll tackle the challenges commonly faced in practical projects. We’ll offer benchmark tests and comprehensive analysis procedures to assist developers in understanding how to effectively harness CPUs and programming languages. Additionally, we’ll introduce a reusable framework to streamline this process, making it easier to replicate and facilitating deeper learning.