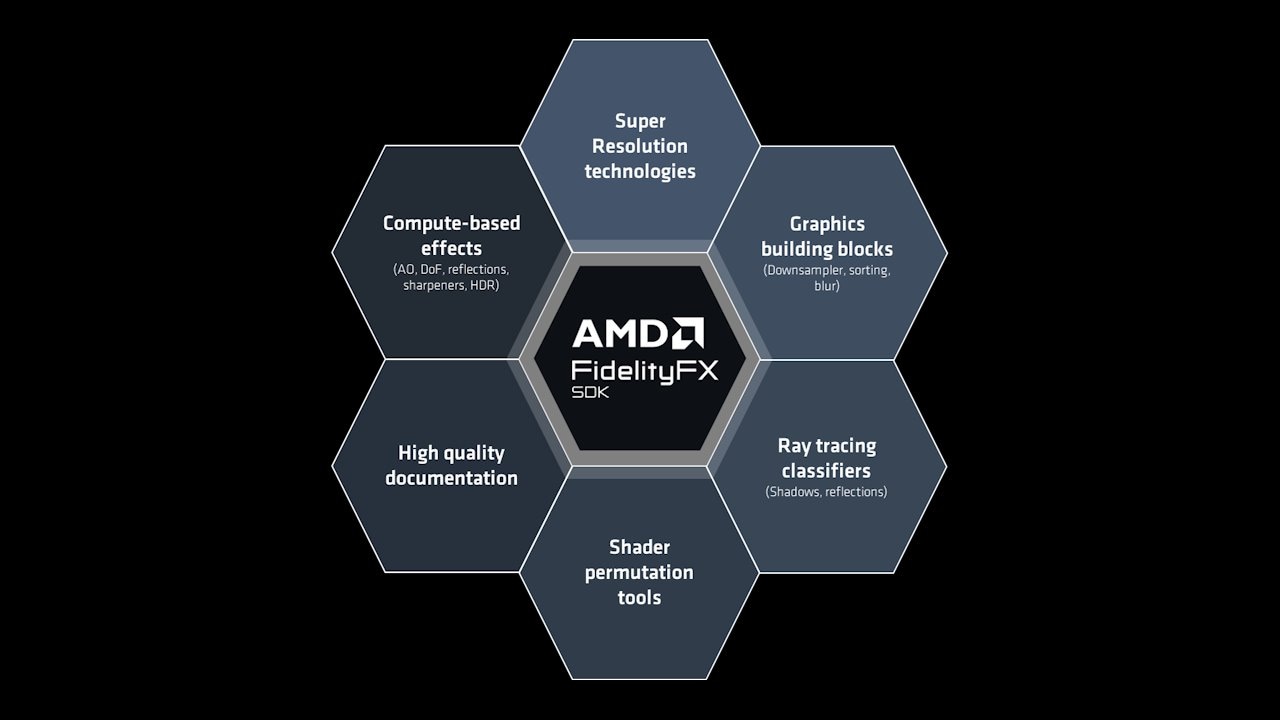

AMD FidelityFX™ SDK

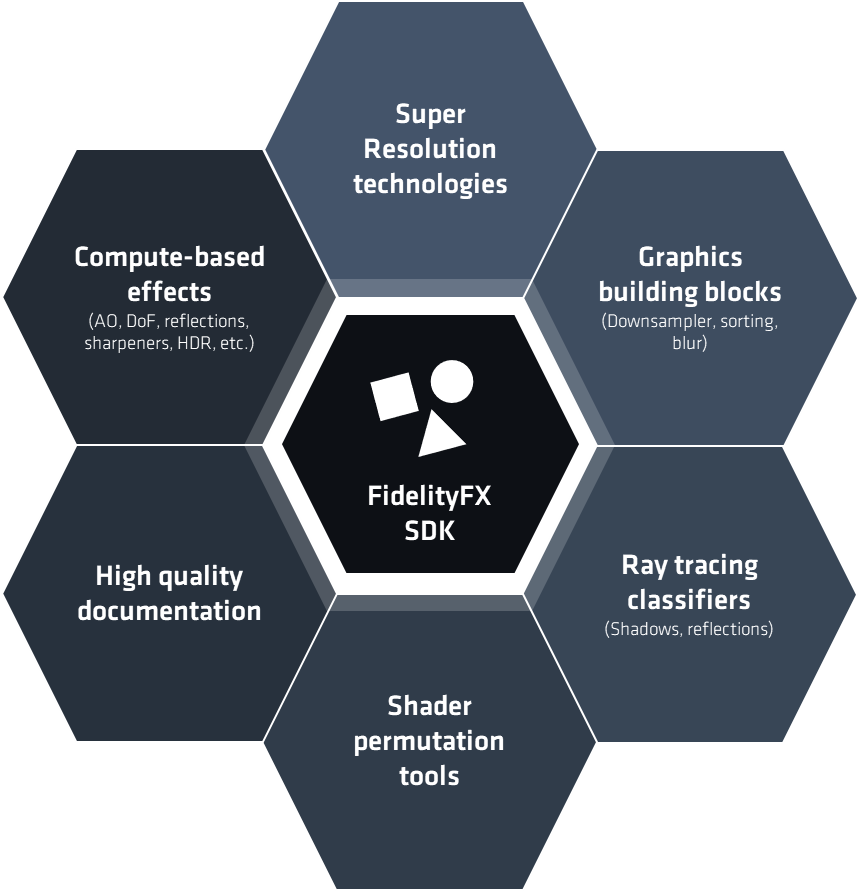

The AMD FidelityFX SDK is our easy-to-integrate solution for developers looking to include FidelityFX features into their games.

We were delighted to see so many of you at the Game Developers Conference this week, whether in meetings or at one of our many technical presentations. We appreciated your time, and we hope you gained some useful knowledge in return!

If you couldn’t make it to GDC, we missed seeing you there, but you won’t miss out entirely. We’re sharing the videos and slide decks from most of our sessions on our GDC 2023 page, and the remaining ones will follow once they’re ready, so keep checking back!

We were very pleased to be invited to present at the Advanced Graphics Summit, where Guillaume Boisse from our Advanced Rendering Research group introduced a two-level radiance caching solution for fast, scalable, real-time dynamic global illumination in games. While you wait for the presentation to become available, make sure you don’t miss the demo video of the solution on YouTube.

Many of our sponsored sessions focused on helping developers improve the performance of their games:

AMD Ryzen™ processor software optimization: [YouTube link] – [Slides – 2MB PDF]

In this talk, CPU experts Ken Mitchell and John Hartwig covered the modern AMD Zen microarchitecture, optimizations, frequent issues, and best practices to make games run faster, build quicker, and make the most of end-user hardware.

Optimizing game performance with the Radeon™ Developer Tool Suite: [YouTube link] – [Slides – 6.3MB PDF]

Developers were taken on a tour of our powerful tools for investigating low-level GPU performance, visualizing GPU memory allocations and leaks, profiling ray tracing pipelines, and much more. Our tools engineers Chris Hesik and Can Alper also revealed upcoming features and improvements, and talked about their collaboration with LunarG to support DirectX® Raytracing in GFXReconstruct.

DirectStorage: Optimizing load-time and streaming: [YouTube link] – [Slides – 2MB PDF]

There’s no doubt that Microsoft® DirectStorage is a game-changer, and David Ziman is one of our experts on the topic! He explained how the technology works and how best to integrate it to get the best possible load times and asset streaming performance in your games.

Our sponsored sessions weren’t just about knowledge sharing: we also introduced some very exciting changes and additions to our AMD FidelityFX™ technologies!

Let’s set the scene with some highlights from our first sponsored session, in which Jason Lacroix introduced the FidelityFX SDK.

[YouTube link]

[Slides – 2.5MB PDF]

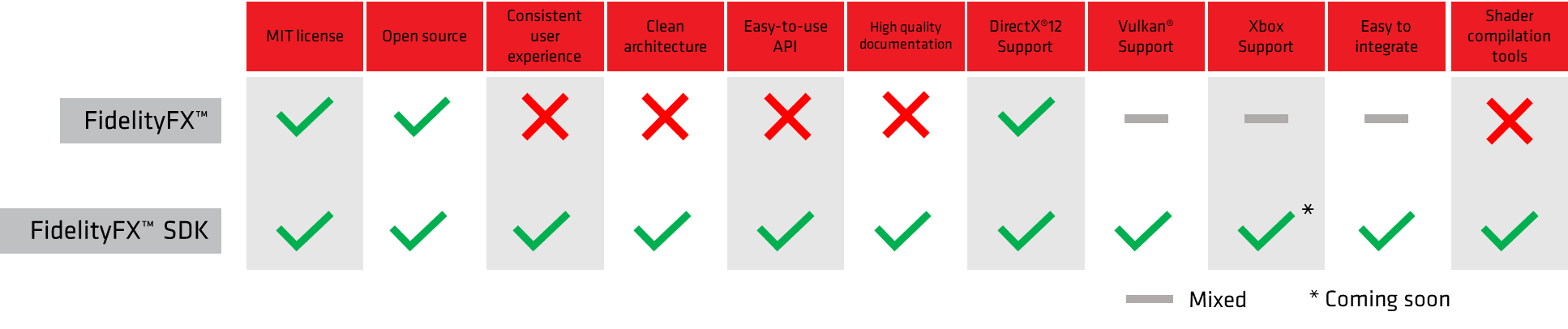

Our first FidelityFX technology, the very popular FidelityFX Contrast Adaptive Sharpening, came out nearly four years ago. Since then, our effects library has continued to grow, but that growth brought growing pains too, such as inconsistent coding and documentation standards and limited platform/API support. As developers ourselves, we knew we could and should do better.

The FidelityFX SDK is exactly that!

It’s our new easy-to-integrate solution for developers looking to include FidelityFX technologies into their games without many of the hassles of complicated integration procedures.

It could revolutionize how you integrate FidelityFX technologies, whether you use one or many. With our new modular architecture, it’s now much easier to try out the others too: you can choose to integrate only the technologies you want and leave the rest behind.

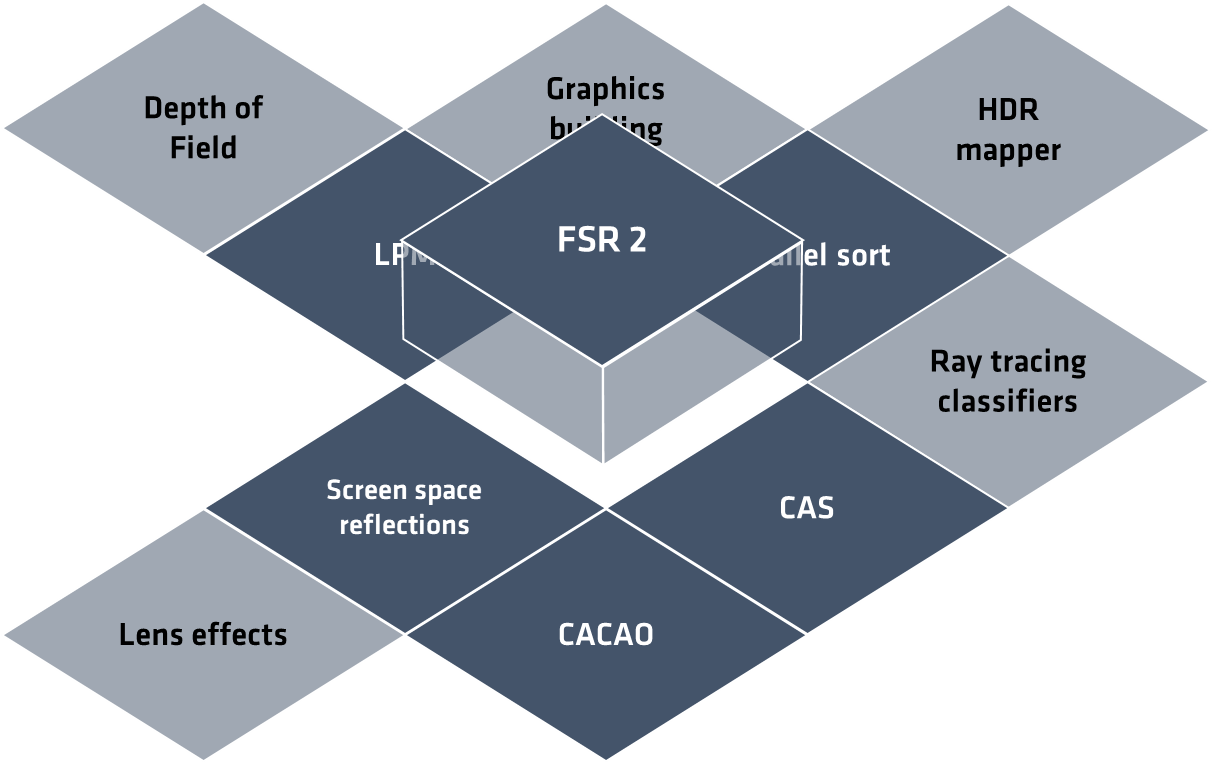

You’ll want to watch our video to find out more details, but we’ll quickly highlight some of the new FidelityFX technologies announced in FidelityFX lead Jason’s talk:

AMD FidelityFX Depth of Field provides physically correct, camera-based depth of field (DoF). It is optimized for speed and uses tile-based classification to select a fast path wherever possible.
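The talk doesn’t spell out how FidelityFX Depth of Field classifies its tiles, so the following is only a minimal sketch of the general idea, not AMD’s shader. It assumes a per-pixel circle-of-confusion (CoC) map in pixels; the function name, the three path labels, and both thresholds are hypothetical. Each tile’s CoC range decides the cheapest path that is still correct: fully in-focus tiles skip the blur, tiles with a near-uniform CoC use a cheap fixed gather, and only mixed tiles pay for the full path.

```python
from enum import Enum


class TilePath(Enum):
    # Hypothetical path labels for this sketch.
    SKIP = "in focus: copy input"
    FAST = "uniform CoC: cheap fixed-size gather"
    FULL = "mixed CoC: full variable-size gather"


def classify_tiles(coc, tile_size=8, focus_eps=0.5, uniform_eps=1.0):
    """Classify each tile of a 2D CoC map (radii in pixels).

    Thresholds are illustrative assumptions, not FidelityFX values.
    Returns a 2D list with one TilePath per tile.
    """
    height, width = len(coc), len(coc[0])
    tiles = []
    for ty in range(0, height, tile_size):
        row = []
        for tx in range(0, width, tile_size):
            # Gather the CoC magnitudes covered by this tile (edge tiles may be smaller).
            values = [abs(coc[y][x])
                      for y in range(ty, min(ty + tile_size, height))
                      for x in range(tx, min(tx + tile_size, width))]
            lo, hi = min(values), max(values)
            if hi < focus_eps:
                row.append(TilePath.SKIP)   # nothing in the tile is visibly blurred
            elif hi - lo < uniform_eps:
                row.append(TilePath.FAST)   # every pixel needs roughly the same blur
            else:
                row.append(TilePath.FULL)   # focus changes within the tile
        tiles.append(row)
    return tiles
```

For example, an 8×16 map whose left half is in focus and whose right half has a constant CoC of 4 pixels yields one `SKIP` tile and one `FAST` tile, so no pixel in it takes the expensive path.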

AMD FidelityFX Lens is a highly optimized single-pass shader which supports some of the more commonly used lens effects in games. To begin with, it will support film grain, chromatic aberration, and vignette, with more expected to come later.
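To show why these three effects fit naturally into a single pass, here is a minimal CPU sketch that applies all of them in one loop over the pixels. It is not the FidelityFX Lens shader: the function name, the parameter defaults, the quadratic vignette falloff, and the uniform random grain are all assumptions chosen for illustration. Chromatic aberration samples the red channel slightly further from the image centre and the blue channel slightly closer, vignette darkens pixels by their normalized distance from the centre, and grain adds zero-mean noise.

```python
import math
import random


def lens_pass(image, ca_strength=0.01, vignette_strength=0.5,
              grain_amount=0.0, seed=0):
    """Hypothetical single-pass lens effect.

    image: list of rows of (r, g, b) floats in [0, 1].
    Applies chromatic aberration, vignette, and film grain per pixel.
    """
    height, width = len(image), len(image[0])
    rng = random.Random(seed)                 # deterministic grain for a given seed
    cx, cy = (width - 1) / 2, (height - 1) / 2
    max_r = math.hypot(cx, cy) or 1.0         # avoid dividing by zero on a 1x1 image

    def sample(x, y, channel):
        # Nearest-neighbour fetch, clamped to the image edge.
        xi = min(max(int(round(x)), 0), width - 1)
        yi = min(max(int(round(y)), 0), height - 1)
        return image[yi][xi][channel]

    out = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = x - cx, y - cy
            # Chromatic aberration: red sampled further out, blue further in.
            r = sample(cx + dx * (1 + ca_strength), cy + dy * (1 + ca_strength), 0)
            g = image[y][x][1]
            b = sample(cx + dx * (1 - ca_strength), cy + dy * (1 - ca_strength), 2)
            # Vignette: quadratic falloff toward the corners.
            dist = math.hypot(dx, dy) / max_r
            v = 1.0 - vignette_strength * dist * dist
            # Film grain: zero-mean noise added equally to all channels.
            n = (rng.random() - 0.5) * grain_amount
            row.append(tuple(min(max(c * v + n, 0.0), 1.0) for c in (r, g, b)))
        out.append(row)
    return out
```

On a uniform mid-grey 5×5 image with grain disabled, the centre pixel stays at 0.5 and the corners drop to 0.25 with the default vignette strength.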

AMD FidelityFX Blur features a highly optimized set of blur kernels ranging in size from 3×3 to 21×21. It also provides a comparative diff tool so you can see how the FidelityFX blur kernels differ from other common blur approaches, such as single-pass box filters.
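One reason large blur kernels can still be cheap is separability: a Gaussian kernel of size N×N can be applied as a horizontal pass followed by a vertical pass, which costs 2N taps per pixel instead of N². The sketch below illustrates that general technique on a grayscale image. It is not the FidelityFX Blur implementation, and the default sigma heuristic (`radius / 2`) and the clamp-to-edge addressing are assumptions.

```python
import math


def gaussian_weights(kernel_size, sigma=None):
    """Normalized 1D Gaussian weights for an odd kernel size (e.g. 3..21)."""
    if kernel_size < 1 or kernel_size % 2 == 0:
        raise ValueError("kernel_size must be a positive odd number")
    radius = kernel_size // 2
    if sigma is None:
        sigma = max(radius / 2.0, 0.5)  # assumed heuristic, not a FidelityFX value
    w = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(w)
    return [x / total for x in w]       # weights sum to 1, so brightness is preserved


def blur_1d(row, weights):
    """Convolve one row with the weights, clamping reads at the edges."""
    radius = len(weights) // 2
    n = len(row)
    return [sum(weights[k + radius] * row[min(max(i + k, 0), n - 1)]
                for k in range(-radius, radius + 1))
            for i in range(n)]


def separable_blur(image, kernel_size, sigma=None):
    """Blur a 2D grayscale image: horizontal pass, then vertical pass."""
    weights = gaussian_weights(kernel_size, sigma)
    rows = [blur_1d(r, weights) for r in image]                # horizontal
    cols = [blur_1d(list(c), weights) for c in zip(*rows)]     # vertical (on columns)
    return [list(r) for r in zip(*cols)]                       # transpose back


def taps_per_pixel(kernel_size):
    """Texture taps per pixel: (single-pass 2D, separable two-pass)."""
    return kernel_size * kernel_size, 2 * kernel_size
```

At the largest size mentioned above, `taps_per_pixel(21)` gives 441 taps for a naive 2D kernel versus 42 for the separable version.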

And finally, our existing and future hybrid ray tracing samples are being brought under the FidelityFX umbrella to keep things neatly together.

We’re really excited to launch the FidelityFX SDK and can’t wait for you to start using it; we expect it to be available in Q2 2023. We’ll continue to develop and add new technologies, which leads neatly into:

[YouTube link]

[Slides – 2.2MB PDF]

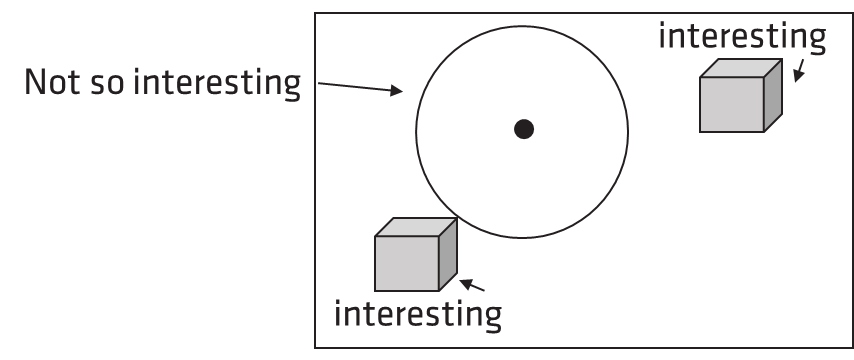

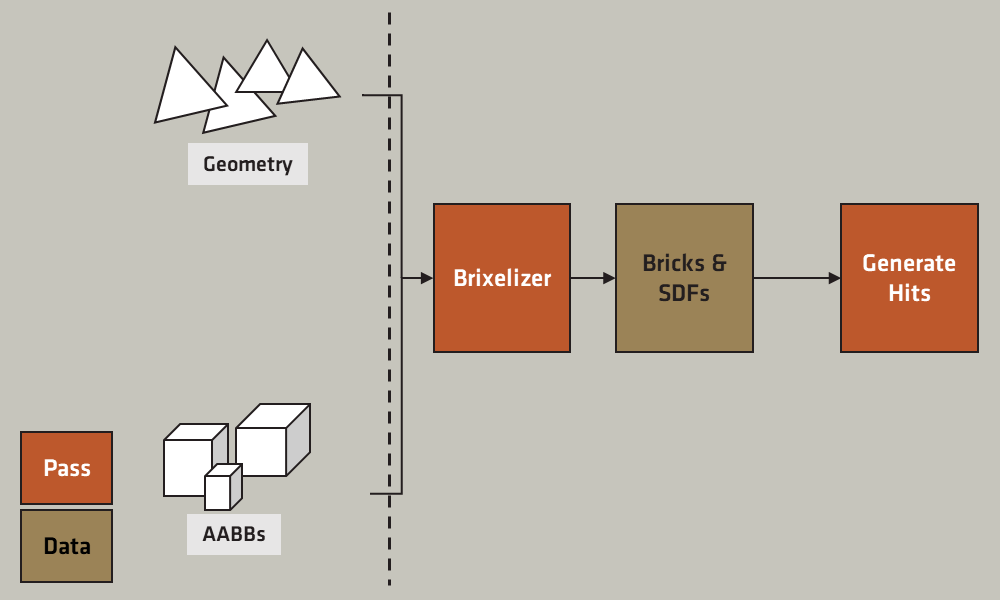

During our “Sparse Distance Fields for Games” session, our highly experienced DevTech engineer, Lou Kramer, introduced one of our expected future additions to the FidelityFX SDK – FidelityFX Brixelizer, AMD’s solution for real-time generation of sparse distance fields in games.

If you’re not sure what sparse distance fields are, Lou gives a great explanation in her presentation.

Brixelizer is a library that generates sparse distance fields in real time for any given triangle-based geometry input. The geometry is provided via index and vertex buffers, transform information, and a set of axis-aligned bounding boxes (AABBs). Brixelizer then works its magic and outputs an SDF, which can be used to find where rays hit the scene.
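A common way to turn a distance field into ray hit points is sphere tracing: at each step, the field tells you the distance to the nearest surface, so the ray can safely advance by that much. The sketch below shows the technique against an analytic sphere, which stands in for Brixelizer’s output. It is not Brixelizer’s API or traversal. A real sparse field would also need to handle empty regions (for example, by skipping unoccupied bricks), and the step limit, hit epsilon, and maximum distance here are assumptions.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance to a sphere; a stand-in for a real distance field."""
    return math.dist(p, center) - radius


def sphere_trace(origin, direction, sdf, max_steps=128, hit_eps=1e-4, max_dist=100.0):
    """March a ray through a distance field; return the hit point or None."""
    length = math.sqrt(sum(d * d for d in direction))
    d = [c / length for c in direction]          # normalize the ray direction
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * di for o, di in zip(origin, d))
        dist = sdf(p)
        if dist < hit_eps:
            return p                             # close enough to a surface: a hit
        # No surface is closer than `dist`, so stepping that far is safe.
        t += dist
        if t > max_dist:
            break                                # ray left the scene
    return None
```

A ray from the origin along +z hits the sphere at z = 4, while a ray along +y misses and returns `None`.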

There’s so much more to FidelityFX Brixelizer than we can go into here. If you want the full details including how the method works, and how you might use Brixelizer to compute global illumination, make sure to check out Lou’s presentation.

Another expected future addition to the FidelityFX SDK was covered as part of our temporal upscaling session:

[YouTube link]

[Slides – 1.9MB PDF]

Core Technology Group engineer Stephan Hodes’ session was a journey through our FidelityFX Super Resolution technologies, with a particular focus on temporal upscaling. He began by explaining the primary motivations behind our spatial upscaler, FSR 1, and how it works. He then moved on to our temporal upscaling solution, FSR 2, sharing plenty of information about not just its initial development but also the subsequent FSR 2.1 and FSR 2.2 point releases and the problems they solved.

Along the way there were plenty of tips on integrating FSR 2, so if you’ve already got it in your game or are planning to add it, you won’t want to miss this presentation. The video is coming shortly, but the slide deck is already available.

During the last part of our temporal upscaling session, we also revealed some early information about FSR 3 and talked about the benefits and challenges of its development.

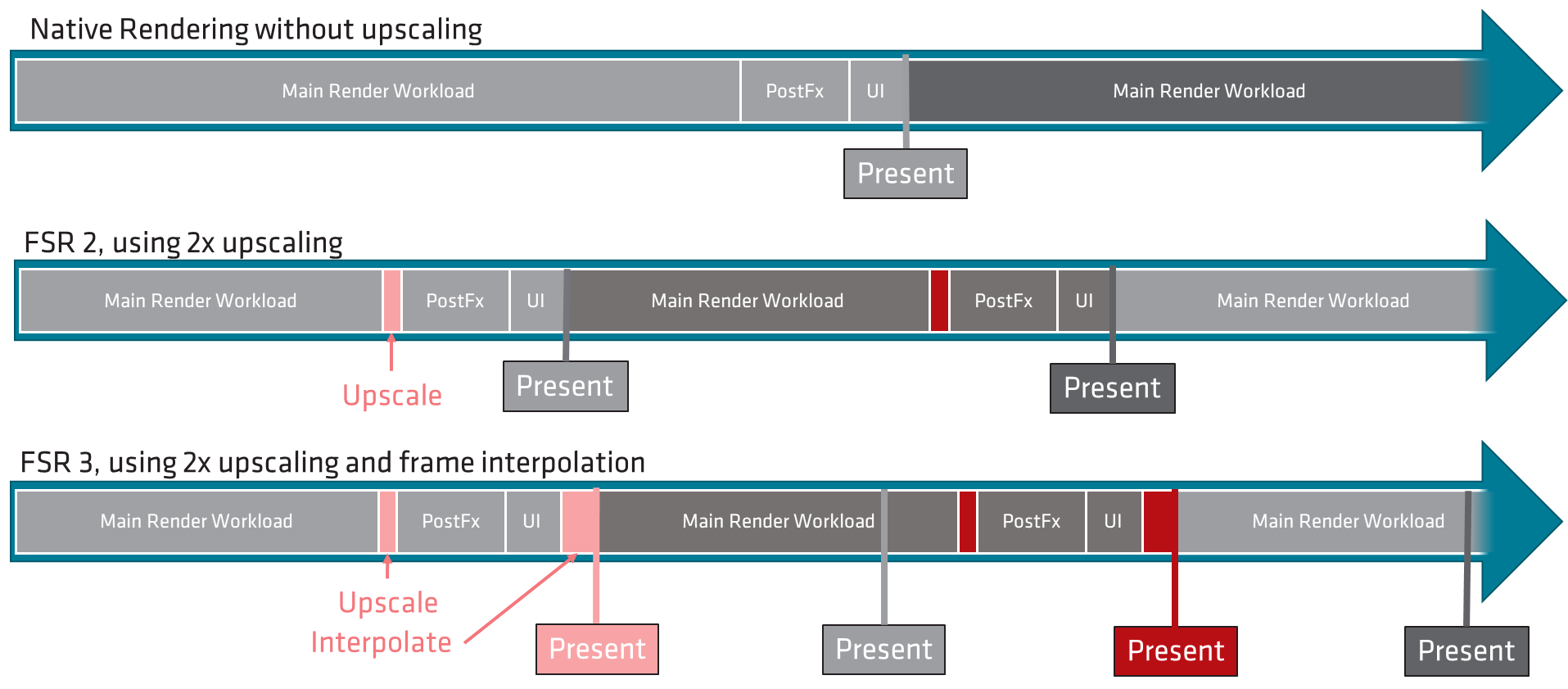

With FSR 2 we’re already computing more pixels than we have samples in the current frame, and we realized we could generate even more by introducing interpolated frames. This allows us to achieve up to a 2x framerate boost.
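To put “more pixels than samples” into numbers, the arithmetic below uses the per-dimension scaling ratios that FSR 2 documents for its quality modes (Quality 1.5x, Balanced 1.7x, Performance 2.0x, Ultra Performance 3.0x). The helper names, the truncating rounding, and the idea of folding interpolated frames into a single “pixels per sample” figure are illustrative assumptions, not how FSR 2 or FSR 3 compute anything internally. Displaying one interpolated frame per rendered frame is what corresponds to the “up to 2x” framerate figure.

```python
# Per-dimension scaling ratios for FSR 2's quality modes.
FSR2_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def render_resolution(display_w, display_h, mode):
    """Render resolution for a display resolution (truncation is an assumption)."""
    s = FSR2_SCALE[mode]
    return int(display_w / s), int(display_h / s)


def output_pixels_per_sample(display_w, display_h, mode, interpolated_per_rendered=0):
    """Displayed pixels per rendered sample (hypothetical helper).

    interpolated_per_rendered=1 models one interpolated frame shown
    for every rendered frame, which doubles the displayed framerate.
    """
    rw, rh = render_resolution(display_w, display_h, mode)
    spatial = (display_w * display_h) / (rw * rh)
    return spatial * (1 + interpolated_per_rendered)
```

For a 3840×2160 output in Performance mode, the game renders at 1920×1080, so each rendered sample contributes to 4 displayed pixels; adding one interpolated frame per rendered frame raises that to 8.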

Frame interpolation is more complex, as there are several challenges:

However, there is good news!

Here’s how rendering changes when we introduce FSR:

FSR 2 increases framerate and improves latency. Adding frame interpolation increases framerate further, but it also increases latency and reduces reactivity. Therefore, we will be adding latency reduction techniques to FSR 3.
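Why interpolation adds latency can be seen in a simple, idealized timing model: the frame between rendered frames N-1 and N cannot be generated until frame N has finished rendering, so frame N must be held back while the interpolated frame is shown. The sketch assumes one interpolated frame per rendered frame, perfectly even presentation, and zero cost for generating the interpolated frame. The function is hypothetical and is not a measurement of FSR 3, whose actual latency depends on the implementation and on the latency reduction techniques mentioned above.

```python
def frame_timing(render_fps, interpolate):
    """Idealized displayed framerate and added latency (ms).

    Assumptions: one interpolated frame per rendered frame, even
    frame pacing, and free interpolation. Not measured FSR 3 behavior.
    """
    frame_time_ms = 1000.0 / render_fps
    if not interpolate:
        return render_fps, 0.0
    # Frame N must finish before the frame between N-1 and N can be built.
    # That interpolated frame is shown first, so frame N is held back by
    # half a rendered-frame interval: that delay is the added latency here.
    return render_fps * 2, frame_time_ms / 2
```

In this model, rendering at 60 fps gives 120 displayed frames per second, at the cost of roughly 8.3 ms of extra delay before each rendered frame is shown.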

FSR 3 combines resolution upscaling with frame interpolation, and if you already have FSR 2 in your game, FSR 3 is expected to be easier to integrate.

As always with our FidelityFX technologies, FSR 3 is expected to be available under the open-source MIT license, for maximum flexibility of integration.

If you want to read more, or you’re interested in our GDC activities from a gamer perspective, head over to AMD’s blog!

GDC may be over now for another year, but there’s still plenty to look forward to coming soon on GPUOpen – and we don’t just mean the new releases announced here.

Keep following us on Twitter and Mastodon or check back on GPUOpen regularly to find out about other new and exciting developments due very soon!