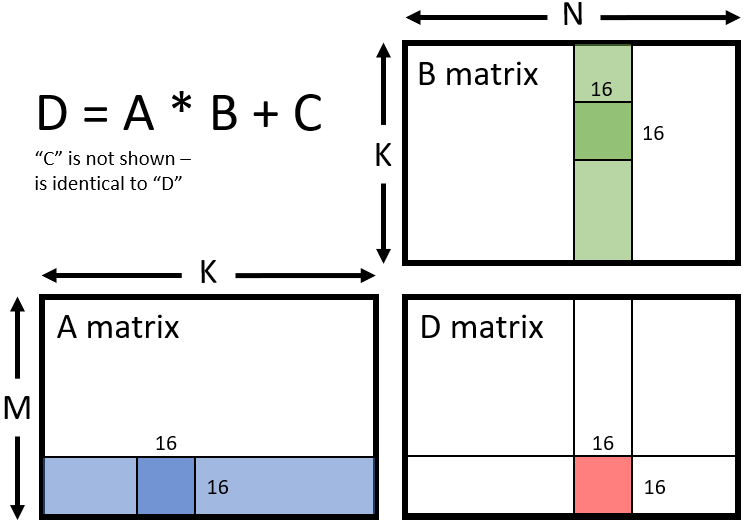

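The new Wave Matrix Multiply Accumulate (WMMA) instructions added in HLSL Shader Model 6.8 allow shader developers to accelerate General Matrix Multiply (GEMM) operations of the form:

$$D = A \times B + C$$

where $A$ is an $M \times K$ matrix, $B$ is a $K \times N$ matrix, and the accumulator $C$ and result $D$ are $M \times N$ matrices.
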
WMMA instructions have all threads in a wave collaboratively perform a matrix multiply operation, with higher efficiency and throughput than was achievable using Shader Model 6.7 or earlier instructions.
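To make the idea of a wave collaborating on one matrix multiply more concrete, here is a minimal CPU-side sketch in Python. It is not HLSL and not the WaveMMA API. It assumes a fixed 16×16×16 tile, loosely modeled on the tile shape of RDNA 3 WMMA instructions, and assumes M, N, and K are multiples of 16. `tile_mma` is a hypothetical stand-in for the work a single WMMA operation performs across a wave; the tiled result is checked against a naive reference.

```python
# Hypothetical CPU-side model of a tiled GEMM, D = A x B + C.
# TILE = 16 is an assumption (loosely modeled on RDNA 3 WMMA tile shapes).
TILE = 16

def gemm_reference(A, B, C):
    """Naive D = A x B + C for row-major lists of lists."""
    M, K, N = len(A), len(B), len(B[0])
    return [[C[i][j] + sum(A[i][k] * B[k][j] for k in range(K))
             for j in range(N)] for i in range(M)]

def tile_mma(A, B, D, i0, j0, k0):
    """Accumulate one TILE x TILE x TILE product into D in place.
    Hypothetical stand-in: on the GPU, this step would be one wave-wide WMMA op."""
    for i in range(i0, i0 + TILE):
        for j in range(j0, j0 + TILE):
            acc = D[i][j]
            for k in range(k0, k0 + TILE):
                acc += A[i][k] * B[k][j]
            D[i][j] = acc

def gemm_tiled(A, B, C):
    """D = A x B + C, assuming M, N, and K are multiples of TILE."""
    M, K, N = len(A), len(B), len(B[0])
    assert M % TILE == 0 and N % TILE == 0 and K % TILE == 0
    D = [row[:] for row in C]  # the accumulator starts as a copy of C
    for i0 in range(0, M, TILE):          # output tile row
        for j0 in range(0, N, TILE):      # output tile column
            for k0 in range(0, K, TILE):  # step along the shared K dimension
                tile_mma(A, B, D, i0, j0, k0)
    return D
```

Because the accumulator starts as C and every tile step adds into it, the outer loops only decide which output tile and which slice of K to process next. On a GPU, different output tiles would typically be assigned to different waves.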

GEMM operations have many uses in signal processing, physics simulation, machine learning, and computer vision.

Some examples include:

- Performing Fast Fourier Transforms (FFTs) for signal processing, such as in audio, radio, or radar applications

- Applying filters and post-processing to images

- Calculating the deformation of objects and fluids, such as in fluid and physics simulations or molecular simulations

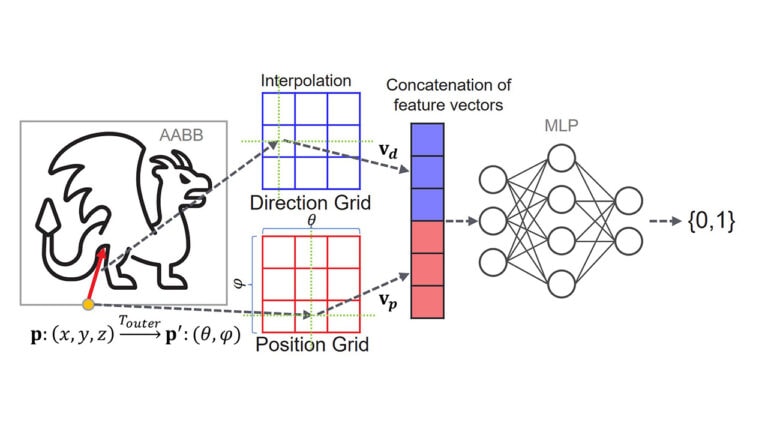

- Implementing deep learning operators – for example convolution, multi-layer-perceptron, neural radiance fields, and so on.
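Several of the uses above, such as image filtering and convolution layers, reach GEMM hardware by first rewriting the convolution as a matrix multiply. A common technique for this is im2col, sketched below in Python under simplifying assumptions: a single image, stride 1, no padding, and cross-correlation as used by deep-learning frameworks. All function names are hypothetical, and the sketch is not taken from any AMD or Microsoft library.

```python
# Hypothetical sketch: lowering a 2D convolution to a single GEMM via im2col.
# Assumes stride 1, no padding, and cross-correlation (no kernel flip).

def matmul(A, B):
    """Plain matrix product of row-major lists of lists."""
    K, N = len(B), len(B[0])
    return [[sum(row[k] * B[k][j] for k in range(K)) for j in range(N)] for row in A]

def im2col(x, kh, kw):
    """x: [C][H][W] -> matrix of shape (C*kh*kw) x (outH*outW)."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    oh, ow = H - kh + 1, W - kw + 1
    cols = []
    for c in range(C):
        for di in range(kh):
            for dj in range(kw):
                # one row per (channel, kernel offset); one column per output pixel
                cols.append([x[c][i + di][j + dj] for i in range(oh) for j in range(ow)])
    return cols

def conv2d_gemm(x, w):
    """x: [C_in][H][W], w: [C_out][C_in][kh][kw] -> [C_out][outH][outW]."""
    kh, kw = len(w[0][0]), len(w[0][0][0])
    oh, ow = len(x[0]) - kh + 1, len(x[0][0]) - kw + 1
    # flatten each filter in the same (channel, di, dj) order that im2col uses
    w_mat = [[v for ch in f for r in ch for v in r] for f in w]
    out = matmul(w_mat, im2col(x, kh, kw))  # shape: C_out x (outH*outW)
    return [[row[i * ow:(i + 1) * ow] for i in range(oh)] for row in out]

def conv2d_direct(x, w):
    """Direct nested-loop convolution, used as a reference."""
    kh, kw = len(w[0][0]), len(w[0][0][0])
    oh, ow = len(x[0]) - kh + 1, len(x[0][0]) - kw + 1
    return [[[sum(f[c][di][dj] * x[c][i + di][j + dj]
                  for c in range(len(x)) for di in range(kh) for dj in range(kw))
              for j in range(ow)] for i in range(oh)] for f in w]
```

The resulting GEMM multiplies a C_out × (C_in·kh·kw) weight matrix by a (C_in·kh·kw) × (outH·outW) patch matrix, which is the kind of operation WMMA instructions are designed to accelerate. The trade-off is extra memory for the input values that im2col duplicates across overlapping patches.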

For more information on WMMA, see:

- Release notes for the Microsoft® Agility SDK Preview Release v1.711.3, which includes support for WaveMMA.

- A preview AMD Software: Adrenalin Edition™ driver, which includes the AMD implementation of the current WaveMMA specification for AMD Radeon™ RX 7000 Series GPUs.

- rocWMMA on GitHub.

Find out more about WMMA and matrix cores here on GPUOpen:

How to accelerate AI applications on RDNA 3 using WMMA

This blog post is a quick how-to guide to using the WMMA feature of our RDNA 3 GPU architecture, built around a Hello World example.

AMD matrix cores (amd-lab-notes)

This first post in the ‘AMD lab notes’ series takes a look at AMD’s Matrix Core technology and how best to use it to speed up your matrix operations.