Input Records

Each Work Graph node can take Input Records, serving as parameters unique to each individual invocation of that node. As a very simple example, consider the following HLSL source:

RWByteAddressBuffer Output : register(u0);

struct InputRecord
{
    uint index;
};

[Shader("node")]
[NodeLaunch("broadcasting")]
[NodeDispatchGrid(1, 1, 1)]
[NumThreads(1, 1, 1)]
void BroadcastNode(DispatchNodeInputRecord<InputRecord> inputData)
{
    Output.Store(inputData.Get().index * 4, 1);
}

Notice the introduction of a new semantic, DispatchNodeInputRecord<>, which instructs the shader compiler to retrieve this value from the (driver-maintained) queue of Input Records. In order to use this semantic with the provided “Hello World” sample, you also need to provide at least one Input Record. There are several options, but a straightforward way to accomplish that would be to modify the D3D12_DISPATCH_GRAPH_DESC populated in DispatchWorkGraphAndReadResults() with something like this:

struct InputRecord { UINT index; };

InputRecord record = { 0 };

// dispatch work graph
D3D12_DISPATCH_GRAPH_DESC DispatchGraphDesc = { };
DispatchGraphDesc.Mode = D3D12_DISPATCH_MODE_NODE_CPU_INPUT;
DispatchGraphDesc.NodeCPUInput = { };
DispatchGraphDesc.NodeCPUInput.EntrypointIndex = 0;
DispatchGraphDesc.NodeCPUInput.NumRecords = 1;
DispatchGraphDesc.NodeCPUInput.pRecords = &record;
DispatchGraphDesc.NodeCPUInput.RecordStrideInBytes = sizeof(InputRecord);

SV_DispatchGrid

Some semantics can be specified while declaring the contents of an Input Record. Of note, SV_DispatchGrid allows you to dynamically specify the number of thread groups required for a given dispatch of a [NodeLaunch("broadcasting")] node. Consider the following HLSL source:

RWByteAddressBuffer Output : register(u0);

struct InputRecord
{
    uint3 DispatchGrid : SV_DispatchGrid;
    uint index;
};

[Shader("node")]
[NodeLaunch("broadcasting")]
[NodeMaxDispatchGrid(64, 64, 1)]
[NumThreads(1, 1, 1)]
void BroadcastNode(DispatchNodeInputRecord<InputRecord> inputData)
{
    Output.InterlockedAdd(inputData.Get().index * 4, 1);
}

Notice how the [NodeDispatchGrid(1, 1, 1)] attribute has been replaced by [NodeMaxDispatchGrid(64, 64, 1)]. This is because the grid size itself is now part of the DispatchNodeInputRecord<>. You could pair the above with something resembling the following declaration in DispatchWorkGraphAndReadResults(), assuming the modifications outlined in the Input Records section are already in-place:

struct InputRecord
{
    UINT DispatchGrid[3];
    UINT index;
};

InputRecord record = { { 2, 1, 1 }, 0 };

This paradigm effectively replicates the process of dispatching a Compute Shader. It can be very useful, especially when porting existing algorithms to Work Graph form.
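To make the host-side layout concrete, here is a minimal C++ sketch that mirrors the HLSL record and checks a requested grid against the [NodeMaxDispatchGrid(64, 64, 1)] declared in the shader. The helper names FitsMaxDispatchGrid and ThreadGroupCount are hypothetical (they are not part of the sample or of D3D12), and std::uint32_t stands in for the Windows SDK's UINT so that the sketch compiles without the SDK.

```cpp
#include <cassert>
#include <cstdint>

// Host-side mirror of the HLSL InputRecord (uint3 DispatchGrid + uint index).
// Assumption: std::uint32_t stands in for the Windows SDK's UINT.
struct InputRecord {
    std::uint32_t DispatchGrid[3];
    std::uint32_t index;
};
static_assert(sizeof(InputRecord) == 16, "record must match the 16-byte HLSL layout");

// Values copied by hand from [NodeMaxDispatchGrid(64, 64, 1)] in the shader.
constexpr std::uint32_t kMaxDispatchGrid[3] = {64, 64, 1};

// Hypothetical helper: true if no grid dimension exceeds the declared maximum.
bool FitsMaxDispatchGrid(const InputRecord& record) {
    for (int axis = 0; axis < 3; ++axis) {
        if (record.DispatchGrid[axis] > kMaxDispatchGrid[axis]) {
            return false;
        }
    }
    return true;
}

// Hypothetical helper: number of thread groups this record requests.
std::uint32_t ThreadGroupCount(const InputRecord& record) {
    assert(FitsMaxDispatchGrid(record));
    return record.DispatchGrid[0] * record.DispatchGrid[1] * record.DispatchGrid[2];
}
```

For the record { { 2, 1, 1 }, 0 } shown above, ThreadGroupCount returns 2: the node launches two thread groups that both read the same index.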

Note: Additional input parameters for the Work Graph function declaration may also be specified and labelled with the kinds of semantics graphics programmers are already familiar with from traditional Compute, like SV_GroupIndex.

Output Records

While Input Records are a great way to transfer data from the CPU or from some prior workload into a Work Graph node, they can also be used to transfer data from one Work Graph node to another. This is done by combining Input Records with Output Records. Consider the following HLSL source:

RWByteAddressBuffer Output : register(u0);

struct InputRecord
{
    uint index;
};

[Shader("node")]
[NodeLaunch("broadcasting")]
[NodeDispatchGrid(1, 1, 1)]
[NumThreads(1, 1, 1)]
void FirstNode([MaxRecords(1)] NodeOutput<InputRecord> SecondNode)
{
    ThreadNodeOutputRecords<InputRecord> record = SecondNode.GetThreadNodeOutputRecords(1);
    record.Get().index = 0;
    record.OutputComplete();
}

[Shader("node")]
[NodeLaunch("broadcasting")]
[NodeDispatchGrid(1, 1, 1)]
[NumThreads(1, 1, 1)]
void SecondNode(DispatchNodeInputRecord<InputRecord> inputData)
{
    Output.Store(inputData.Get().index * 4, 1);
}

There are quite a few things to unpack here!

First, notice that FirstNode does not take an input record in this example. If you’ve been following along with some of the previous Building Blocks, you’ll want to make sure you also go back and remove the logic we’ve added specifying an InputRecord value in DispatchWorkGraphAndReadResults().

Now let’s examine the NodeOutput<> parameter to FirstNode. The name of this parameter must exactly match the function name of a target node that is declared elsewhere in this Work Graph (in this case, SecondNode). Also notice that the targeted node must declare an input record parameter (here, DispatchNodeInputRecord<>) with the same template parameter as this NodeOutput<> parameter.

Next, there are two new functions we engage with inside the body of FirstNode: GetThreadNodeOutputRecords() and OutputComplete(). I think about GetThreadNodeOutputRecords() as allocating a block of memory to stuff data into, while OutputComplete() enqueues that block into a collection of Input Records for the targeted node to process. You’ll also notice GetThreadNodeOutputRecords() takes a parameter, the number of records to allocate, which is hard-coded to 1 in this example. This parameter is only useful in more sophisticated use-cases than presented here: see the section on Recursion to explore this in more detail!

Also notice the NodeOutput<> parameter is decorated with the [MaxRecords(n)] attribute. This constant represents the maximum number of Output Records that a single thread group will ever invoke OutputComplete() against. This attribute is correctly set to 1 in this example, but it will need to change based on the contents and intent of each unique shader. In this example, if you changed [NumThreads(1, 1, 1)] to [NumThreads(2, 1, 1)] in the FirstNode declaration, you would also need to specify [MaxRecords(2)].

Finally, pay attention to the fact that there are now multiple nodes in this Work Graph. Recall that while populating the D3D12_DISPATCH_GRAPH_DESC, we hard-coded NodeCPUInput.EntrypointIndex = 0. In a graph with multiple nodes, this assumption may no longer be adequate and should be re-evaluated. This block from Microsoft’s DirectX® 12 Work Graphs specification covers our use case nicely:

… when the D3D12_WORK_GRAPH_FLAG_INCLUDE_ALL_AVAILABLE_NODES flag is specified (opt-in), the runtime simply includes all nodes that have been exported from DXIL libraries, connects them up, and those that aren’t targeted by other nodes in the graph are assumed to be graph entrypoints.

We included the D3D12_WORK_GRAPH_FLAG_INCLUDE_ALL_AVAILABLE_NODES flag during State Object creation when we called WorkGraphDesc->IncludeAllAvailableNodes(). In this example, SecondNode is explicitly targeted by FirstNode. Under these conditions and according to the DirectX 12 Work Graphs specification, FirstNode remains the only valid entrypoint and our assumption that it must be EntrypointIndex == 0 is still valid. See the Referencing Work Graph Entrypoints by Name instead of by Index section for thoughts on resolving this assumption more explicitly!
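The entrypoint rule quoted above can be sketched on the CPU. This is only a model of the rule as the specification words it, not the runtime's implementation: FindEntrypoints and the Edge alias are hypothetical names, and the sketch says nothing about the order in which the runtime assigns entrypoint indices.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <utility>
#include <vector>

// An edge {producer, target} means "producer declares a NodeOutput<> aimed at target".
using Edge = std::pair<std::string, std::string>;

// Hypothetical helper: returns the nodes that no *other* node targets.
// A node that only targets itself (recursion) still counts as an entrypoint.
std::vector<std::string> FindEntrypoints(const std::vector<std::string>& nodes,
                                         const std::vector<Edge>& edges) {
    const std::set<std::string> known(nodes.begin(), nodes.end());
    std::set<std::string> targeted;
    for (const Edge& edge : edges) {
        // Precondition: both ends of every edge are declared nodes.
        assert(known.count(edge.first) == 1 && known.count(edge.second) == 1);
        if (edge.first != edge.second) {
            targeted.insert(edge.second);
        }
    }
    std::vector<std::string> entrypoints;
    for (const std::string& node : nodes) {
        if (targeted.count(node) == 0) {
            entrypoints.push_back(node);
        }
    }
    return entrypoints;
}
```

With nodes {FirstNode, SecondNode} and the single edge FirstNode -> SecondNode, the only entrypoint this model reports is FirstNode, which matches the reasoning above.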

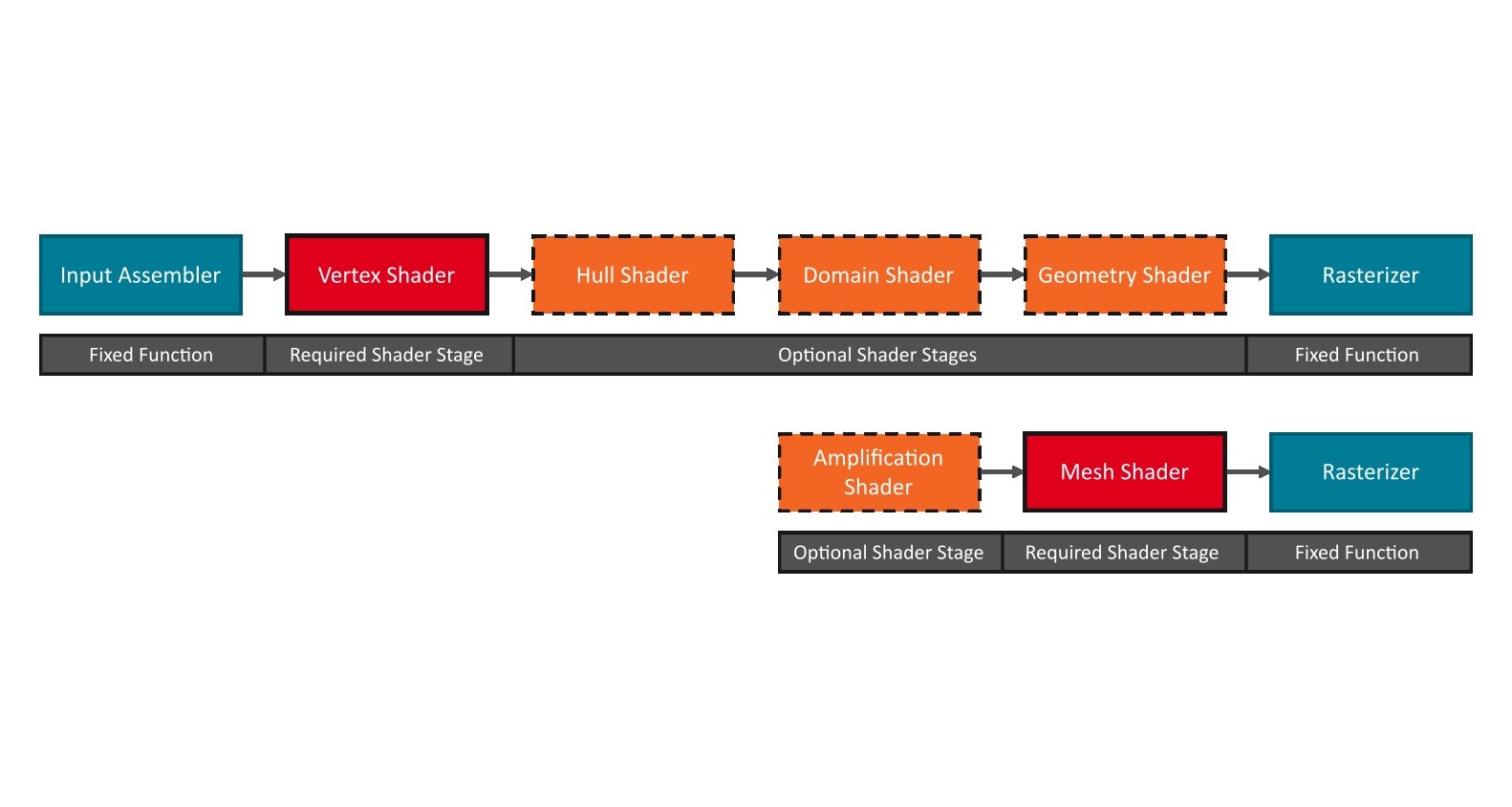

NodeLaunch Modes

All of the Work Graph nodes in all of the examples presented so far have used the same value for the [NodeLaunch()] attribute - "broadcasting". There are two other values for this attribute, "thread" and "coalescing", and these three options are collectively worth exploring in more detail. The distinction between these modes has to do with how many Input Records a thread group will receive and, perhaps more importantly… how many threads will process each Input Record.

Broadcasting

In a "broadcasting" node, the entire dispatch grid receives a single Input Record (and therefore all threads in a dispatched thread group process the same Input Record). Even though we’ve been looking at examples using "broadcasting" nodes, all of those examples have also been restricted to [NumThreads(1, 1, 1)]… so they haven’t really been good examples of why you might want to use a "broadcasting" node. A common use case for why you might prefer a "broadcasting" node is if the Input Records themselves don’t have any bearing on the work that needs to be done. For example:

- The Input Record is just an offset into an existing data structure provided as a UAV or structured buffer. In this case, copying the data into a record is not necessary.

- The Input Record provides an ID that is then combined with the thread ID to further index. For example, you provide a tile ID as the Input Record which is broadcast to all threads, and each thread calculates the specific offset using the dispatch thread ID.
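As a rough illustration of the tile ID case, the following C++ sketch models on the CPU what a single thread of a "broadcasting" node might compute. Everything specific here is an assumption made for the example: square 8x8 tiles, tile IDs numbered row by row, a row-major image whose width is a multiple of the tile size, and the helper name TexelIndex.

```cpp
#include <cassert>
#include <cstdint>

// Assumed tile edge length; it would match a hypothetical [NumThreads(8, 8, 1)].
constexpr std::uint32_t kTileSize = 8;

// CPU model of one thread's work in a "broadcasting" node.
// tileId comes from the Input Record shared by all threads;
// threadX/threadY identify this thread within the tile.
std::uint32_t TexelIndex(std::uint32_t tileId, std::uint32_t threadX, std::uint32_t threadY,
                         std::uint32_t imageWidth) {
    assert(imageWidth % kTileSize == 0 && threadX < kTileSize && threadY < kTileSize);
    const std::uint32_t tilesPerRow = imageWidth / kTileSize;
    const std::uint32_t x = (tileId % tilesPerRow) * kTileSize + threadX;
    const std::uint32_t y = (tileId / tilesPerRow) * kTileSize + threadY;
    return y * imageWidth + x; // row-major index of the texel this thread owns
}
```

The record stays tiny (a single ID), while the per-thread variation comes entirely from the thread ID.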

Thread

A "thread" node is effectively the opposite of a "broadcasting" node. In a "thread" node, the thread group receives a single Input Record for every thread that executes (and every thread processes a different Input Record than every other thread in the thread group). A common use case for why you might prefer a "thread" node is if you have many similar pieces of data that all need to be processed in the same way… and the processing of a single unit of that data can also be efficiently handled by a single-threaded execution path.

Coalescing

"coalescing" nodes live in between these two opposites. A "coalescing" node takes a variable, user-specified number of Input Records per thread group (and uses a per-algorithm number of threads to process each Input Record). This flexibility comes with some overhead - "coalescing" nodes are slightly more complicated to use than their "broadcasting"- and "thread"- launched brethren. Consider the following HLSL source:

RWByteAddressBuffer Output : register(u0);

struct InputRecord
{
    uint index;
};

[Shader("node")]
[NodeLaunch("broadcasting")]
[NodeDispatchGrid(1, 1, 1)]
[NumThreads(5, 1, 1)]
void FirstNode(
    uint GroupIndex : SV_GroupIndex,
    [MaxRecords(5)] NodeOutput<InputRecord> SecondNode)
{
    ThreadNodeOutputRecords<InputRecord> record = SecondNode.GetThreadNodeOutputRecords(1);
    record.Get().index = GroupIndex + 1;
    record.OutputComplete();
}

[Shader("node")]
[NodeLaunch("coalescing")]
[NumThreads(32, 1, 1)]
void SecondNode(
    uint GroupIndex : SV_GroupIndex,
    [MaxRecords(8)] GroupNodeInputRecords<InputRecord> inputData)
{
    uint inputDataIndex = GroupIndex / 4;
    if (inputDataIndex < inputData.Count())
    {
        Output.Store(GroupIndex * 4, inputData[inputDataIndex].index);
    }
}

"coalescing" nodes don’t receive a DispatchNodeInputRecord<> or ThreadNodeInputRecord<> parameter, but rather take a GroupNodeInputRecords<>. It should always be annotated with [MaxRecords(N)], as the default is 1 and in that case you probably shouldn’t be using a "coalescing" node to start with. In the example above, we’ve set [MaxRecords(8)], allowing up to 8 Input Records to be queued up for a single thread group.

Notice that these values are interconnected: 8 * 4 = 32.

- GroupNodeInputRecords<>: each thread group will receive no more than 8 Input Records.

- inputDataIndex: each group of 4 threads processes the same Input Record.

- [NumThreads()]: each thread group contains 32 threads.

Defining these kinds of relationships makes it common to use a traditional Compute semantic (in this case, SV_GroupIndex) in connection with "coalescing" nodes.

Also, notice the use of the Count() function. The [MaxRecords()] annotation for the GroupNodeInputRecords is a maximum, not a guarantee. It is not safe to assume that every time a "coalescing" thread group is launched it will contain a full payload of Input Records, and you need to ensure that the data you want exists before you access it!
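To see why the Count() guard matters, here is a small CPU model of a single SecondNode thread group. It is a sketch under a strong assumption: that all of the records it is given arrive together in one thread group and in the order listed, which the hardware does not guarantee. The function name RunCoalescingGroup and the kUntouched marker are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr std::uint32_t kThreadsPerGroup = 32;    // [NumThreads(32, 1, 1)]
constexpr std::uint32_t kThreadsPerRecord = 4;    // GroupIndex / 4
constexpr std::uint32_t kMaxRecords = 8;          // [MaxRecords(8)]
constexpr std::uint32_t kUntouched = 0xFFFFFFFFu; // marks slots that no thread wrote

// CPU model of one SecondNode thread group.
// records.size() plays the role of inputData.Count().
std::vector<std::uint32_t> RunCoalescingGroup(const std::vector<std::uint32_t>& records) {
    assert(records.size() <= kMaxRecords);
    std::vector<std::uint32_t> output(kThreadsPerGroup, kUntouched);
    for (std::uint32_t groupIndex = 0; groupIndex < kThreadsPerGroup; ++groupIndex) {
        const std::uint32_t inputDataIndex = groupIndex / kThreadsPerRecord;
        if (inputDataIndex < records.size()) { // the Count() guard
            // Output.Store(GroupIndex * 4, ...) writes the 32-bit slot at GroupIndex.
            output[groupIndex] = records[inputDataIndex];
        }
    }
    return output;
}
```

Feeding it the five values FirstNode emits (1 through 5) fills only the first 20 slots. The last 12 threads find no record at their index, and without the guard they would read past the end of the payload.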

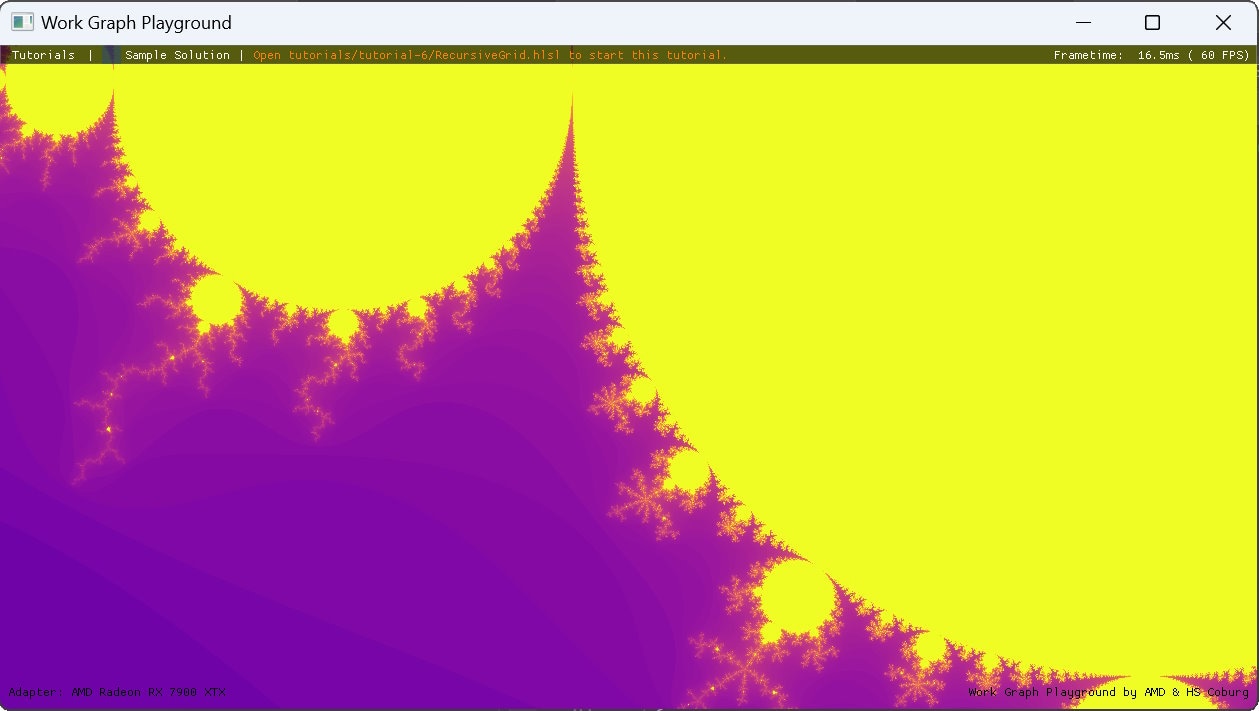

Recursion

In a Work Graph, recursion is the process of invoking OutputComplete() on an Output Record which targets the same node that invoked OutputComplete(), thereby instructing the graph to spin up another thread in the future which will execute the same logic currently executing on a potentially different input. GPU-based recursion can be a powerful tool for implementing looping algorithms or navigating certain kinds of data structures. Work Graph recursion can also be used to more directly implement existing patterns in which ExecuteIndirect is repeatedly (and worst-case) invoked from the CPU.

There are several limitations to be aware of when using Work Graph recursion. Consider the following HLSL source:

RWByteAddressBuffer Output : register(u0);

struct InputRecord
{
    uint depth;
};

[Shader("node")]
[NodeLaunch("broadcasting")]
[NodeDispatchGrid(1, 1, 1)]
[NodeMaxRecursionDepth(5)]
[NumThreads(1, 1, 1)]
void RecursiveNode(
    DispatchNodeInputRecord<InputRecord> inputData,
    [MaxRecords(1)] NodeOutput<InputRecord> RecursiveNode)
{
    Output.Store(inputData.Get().depth * 4, GetRemainingRecursionLevels());

    bool shouldRecurse = (GetRemainingRecursionLevels() > 0);
    GroupNodeOutputRecords<InputRecord> record = RecursiveNode.GetGroupNodeOutputRecords(shouldRecurse ? 1 : 0);
    if (shouldRecurse)
    {
        record.Get().depth = inputData.Get().depth + 1;
        record.OutputComplete();
    }
}

First, notice the [NodeMaxRecursionDepth()] attribute attached to this node. All recursive nodes are required to specify this attribute, because there is an API-defined limit on how deep the execution of Work Graph nodes can proceed; in the Microsoft DirectX 12 Work Graphs specification this limit is 32.

The longest chain of nodes cannot exceed 32. Nodes that target themselves recursively count against this limit by their maximum declared recursion level.

This limitation is enforced during Shader Compilation when trivially possible, and again during State Object generation with greater context. Information about errors identified during these steps can be easily retrieved using the DirectX 12 Debug Layer.

Next, notice the usage of the GetRemainingRecursionLevels() intrinsic. While the DirectX 12 runtime can detect and prevent you from declaring an intent to exceed the depth ceiling… it can’t prevent the executing code from ignoring your declared intent and recursively exceeding the depth ceiling anyway. This intrinsic can be used as a safeguard against blowing your recursion budget, which in the (probably) best-case scenario will lead to a hung GPU… and in the worst-case scenario will lead to very obscure bugs.
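The control flow of the RecursiveNode example can be modeled on the CPU with a simple queue. This sketch assumes that the first invocation sees the full declared depth from GetRemainingRecursionLevels() and that each self-targeted record sees one level fewer, and that the CPU supplies a single Input Record with depth 0. PendingRecord and RunRecursiveNode are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>
#include <vector>

constexpr std::uint32_t kMaxRecursionDepth = 5; // [NodeMaxRecursionDepth(5)]

struct PendingRecord {
    std::uint32_t depth;           // payload carried in the Input Record
    std::uint32_t remainingLevels; // assumed result of GetRemainingRecursionLevels()
};

// CPU model of the RecursiveNode example: returns the contents of the Output buffer.
std::vector<std::uint32_t> RunRecursiveNode() {
    std::vector<std::uint32_t> output(kMaxRecursionDepth + 1, 0);
    std::queue<PendingRecord> pending;
    pending.push({0, kMaxRecursionDepth}); // the single CPU-provided Input Record

    while (!pending.empty()) {
        const PendingRecord current = pending.front();
        pending.pop();
        assert(current.depth < output.size());
        // Output.Store(depth * 4, GetRemainingRecursionLevels())
        output[current.depth] = current.remainingLevels;

        // The guard from the shader: only recurse while levels remain.
        const bool shouldRecurse = current.remainingLevels > 0;
        if (shouldRecurse) {
            pending.push({current.depth + 1, current.remainingLevels - 1}); // OutputComplete()
        }
    }
    return output;
}
```

Under those assumptions the node runs six times (the initial launch plus five recursive ones) and then stops on its own, because the guard refuses to allocate another record once no levels remain.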

Also notice that the looping structure of this recursion is very simple - it’s just a node targeting itself. That structure is important because this simplicity is the only usage permitted at the current time! From the Microsoft DirectX 12 Work Graphs specification:

To limit system complexity, recursion is not allowed, with the exception of a node targeting itself. There can be no other cycles in the graph of nodes.

Finally, take a closer look at the invocation of GetGroupNodeOutputRecords(). Previously, we hard-coded a value of 1 as the argument… but in this example we are passing in a variable. Conceptually, you can think about this pattern in these terms:

bool shouldRecurse = (GetRemainingRecursionLevels() > 0);

if (shouldRecurse)
{
    GroupNodeOutputRecords<InputRecord> record = RecursiveNode.GetGroupNodeOutputRecords(shouldRecurse ? 1 : 0);
    record.Get().depth = inputData.Get().depth + 1;
    record.OutputComplete();
}

While the above code is maybe a little easier to understand, it’s also not valid! From the Microsoft DirectX 12 Work Graphs specification:

Calls to this method [GetGroupNodeOutputRecords()] must be thread group uniform though, not in any flow control that could vary within the thread group (varying includes threads exiting), otherwise behavior is undefined.

This limitation applies to all variations of Get{Group|Thread}NodeOutputRecords(), including the thread, group, and array permutations. Decide however you like whether this thread needs to allocate an Output Record, and then pass that decision in as the record-count argument to Get{Group|Thread}NodeOutputRecords() from a thread group-invariant scope. If you decide you don’t need to allocate any members from an array of Output Records, pass in 0 instead of 1.

Finally, ensure that you only call OutputComplete() on a record that was actually allocated! It is not only legal to invoke OutputComplete() inside an if-block, but in fact you almost always should. Calling OutputComplete() on an Output Record that was not actually allocated results in undefined behavior, including GPU hangs.