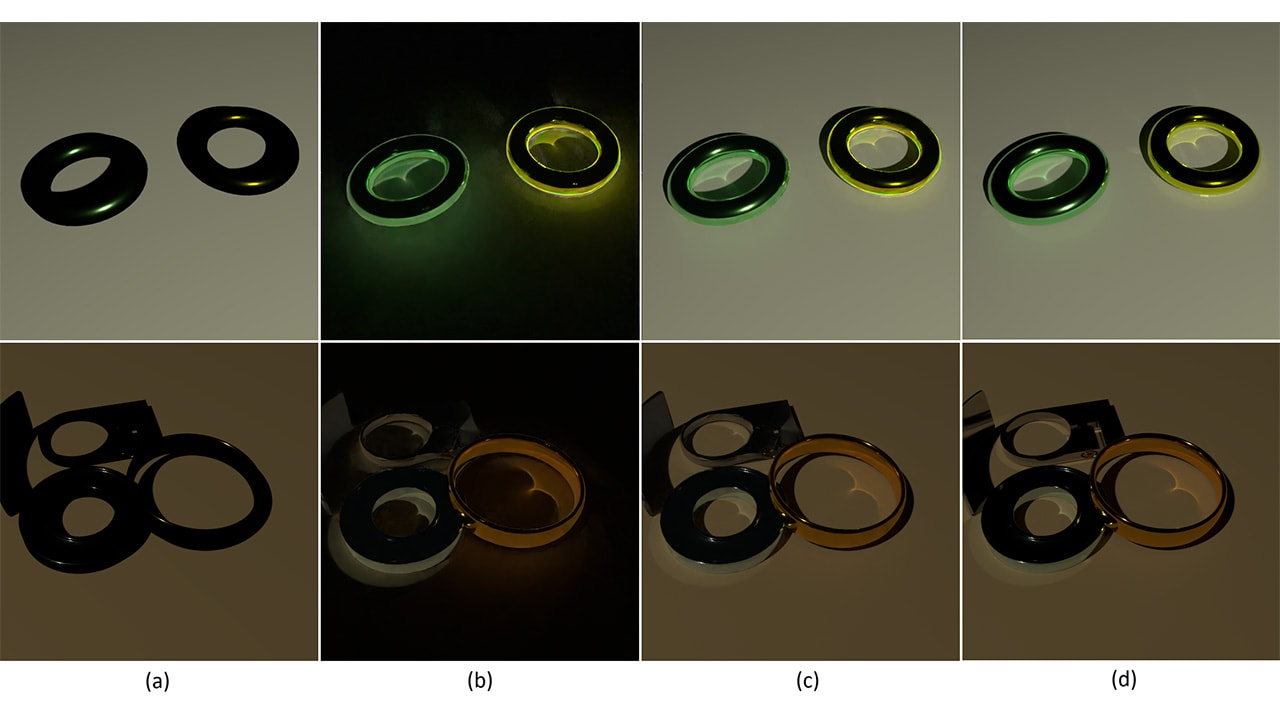

Announcing AMD FidelityFX SDK 2.0 with AMD FSR 4

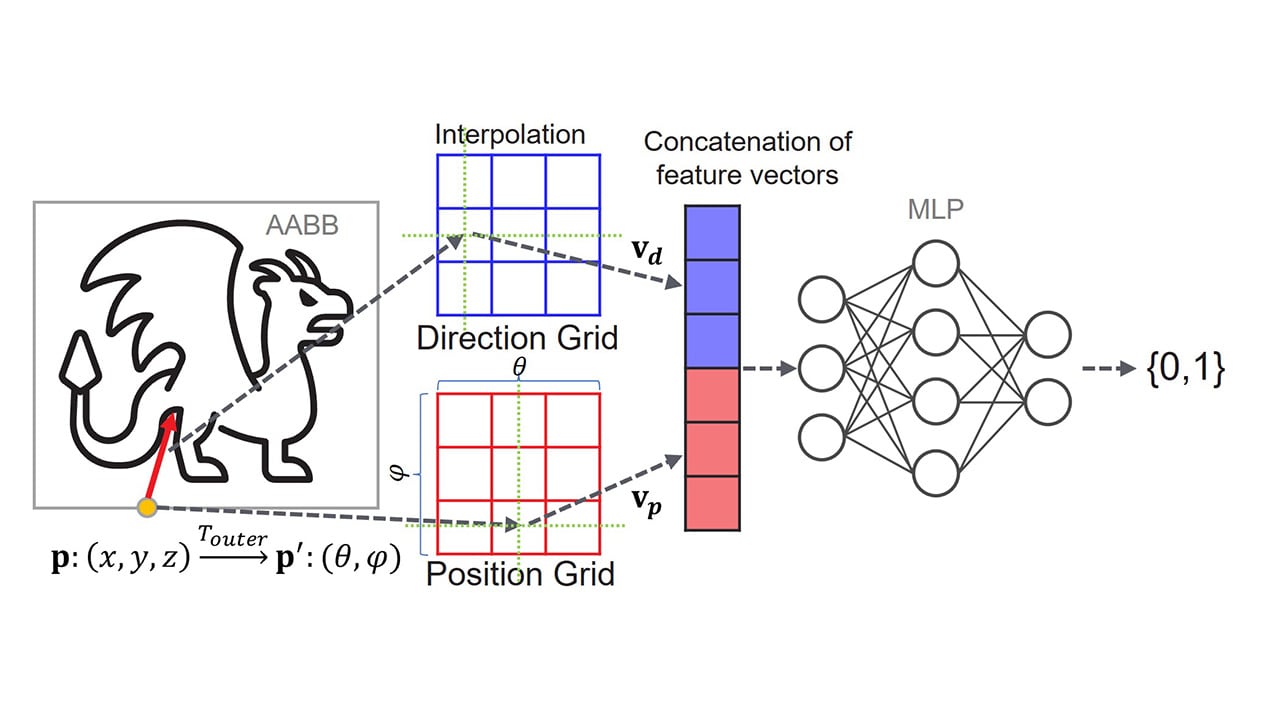

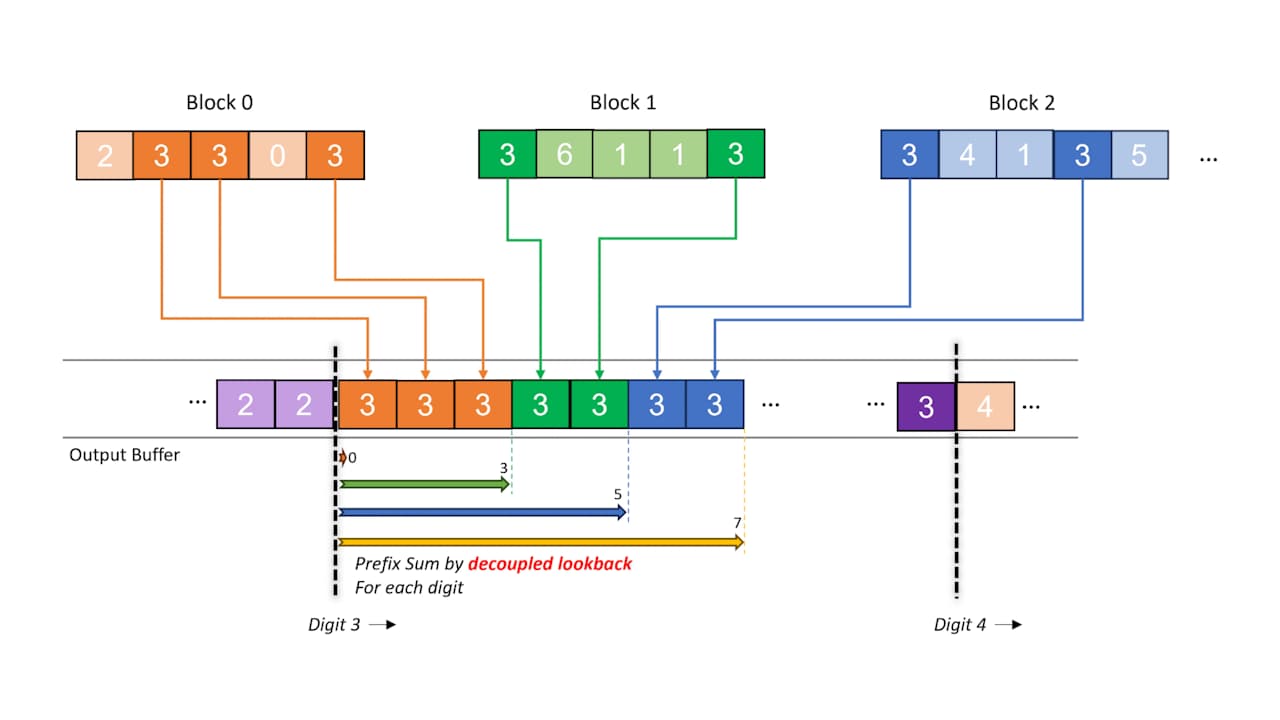

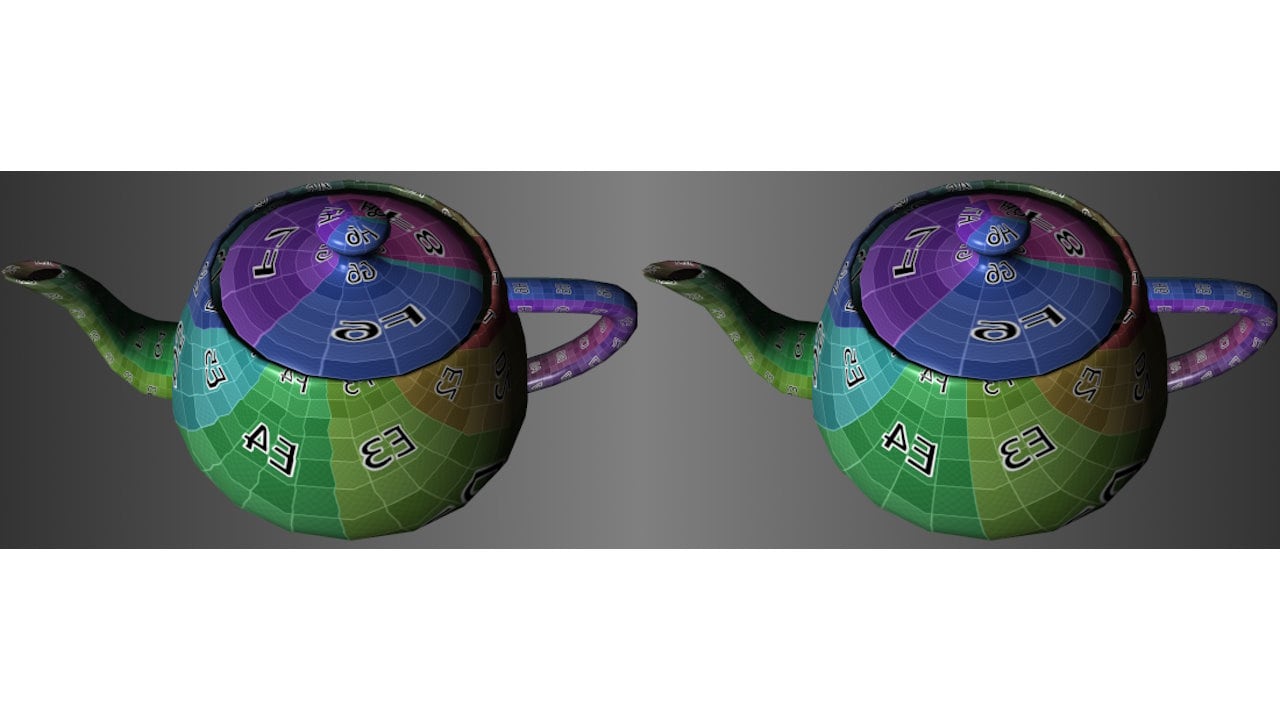

The launch vehicle for our ML-based rendering technologies is the just-released AMD FidelityFX™ SDK 2.0.

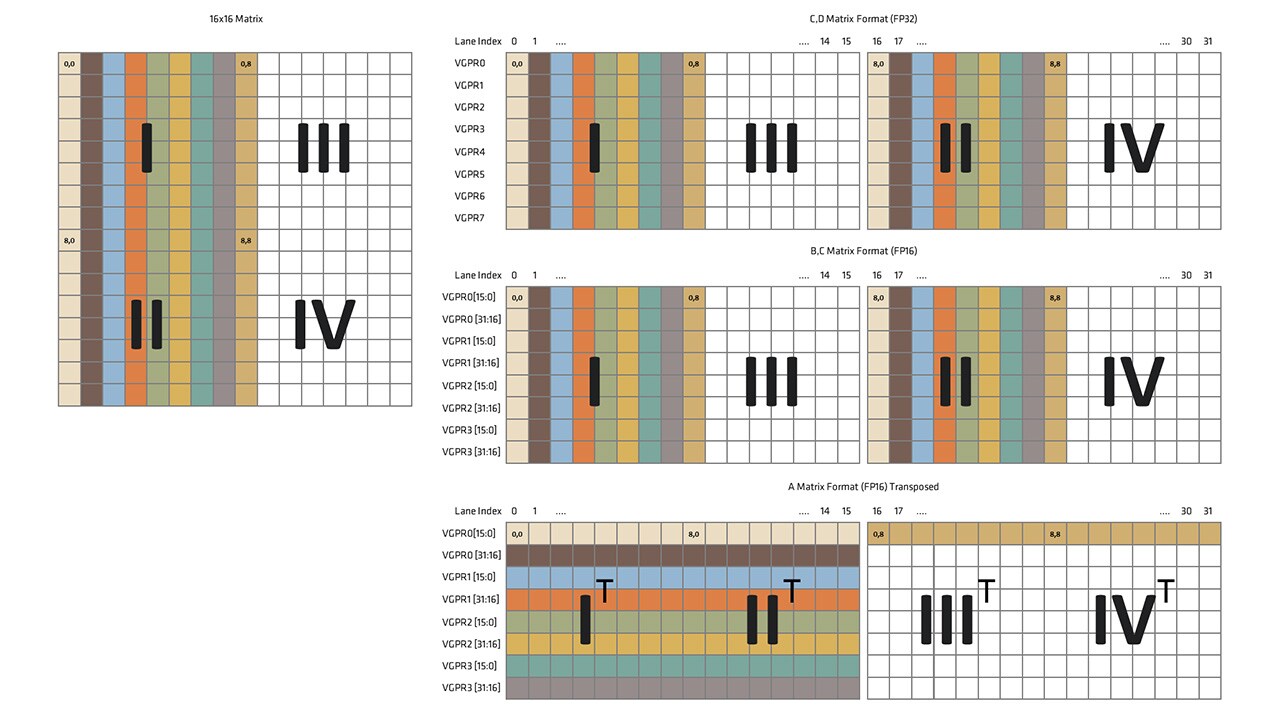

Integrating this update gets you our cutting-edge ML upscaler, AMD FidelityFX Super Resolution 4 (FSR 4), which uses hardware-accelerated features of the AMD RDNA™ 4 architecture.

An Unreal® Engine 5 plugin is also available.
